AWS / EPOCH OPTIMIZER [2023]

With climate denial on the wane, former denialists have transitioned to portraying sustainability as sacrifice.

The individuals and corporations trying to slow efforts to electrify, to shift to renewables, and to better our relationships to the built environment, transport, and food, are attempting to convince the public that a greener future means giving up comfort, expediency, and pleasure. That’s a powerful argument to the weakly engaged.

For people who don't confront these topics every day, it's enough to hear these ideas a few times to be left with images of range anxiety, bland tofu, and an electric coil where the gas range used to be.

At best, to the casual observer, the conversation around climate change might seem like a grey world of actuarial minutiae: carbon offsets, regulation, and taxes. Not inspiring stuff.

For the Sustainability Showcase at AWS re:Invent 2023, we wanted to explore how generative AI could help us envision sustainability transformations as something desirable. We wanted to create images that answer the question:

"What if we made different decisions with the same resources?"

Using the foundation model APIs in Amazon Bedrock and AWS's new Inferentia and Trainium compute instances, we created a series of high-resolution 'start plate' images representing recognizable, real-world status-quo conditions. We then built a layer cake of diffusion inference techniques, including fine-tuned models, custom LoRAs, textual inversion, and layered ControlNets, to gradually inpaint a plausible 'after' scenario in its place, in real time.

Where SDXL was the visual medium, our hand-rolled Claude chatbot, running on the Bedrock backend, was our creative partner, churning out hundreds of ideas to explore.

In the end, we created dozens of these scenes, including:

A festive table laden with meat-heavy dishes ➝

A sustainable feast of beautiful seasonal vegetables and grains

A typical American gas station ➝

A biophilic charging oasis with shaded seating, lush landscaping, and a cafe

A typical American 'stroad' ➝

A solar-harvesting protected bike lane adjacent to light rail, making a walkable community

A dense monoculture industrial greenhouse ➝

A vertical aquaponics facility growing a variety of crops

A diesel bus, idling in a cloud of smoke ➝

A sleek electric bus charging wirelessly through its bus stop

A series of flat, barren urban rooftops ➝

A connected series of lush green roofs shaded by solar pergolas

The physical embodiment of the installation was a larger-than-life, high-resolution LED display that rendered these transformations in real time throughout the run of the re:Invent conference.

There are some fast-moving GIFs on this page showing condensed examples of these transformations in motion, but, as always with our work, the real thing plays out at a more contemplative pace, inviting the viewer to closer inspection.

Technical effort aside, much of the labor in this project was dedicated to understanding the texture of the foundation models we worked with. Sustainability has an aesthetic, and that bias in the training material can make some AI-generated portrayals of sustainable outcomes feel unreachably utopian or, disappointingly, bourgeois and expensive. We didn't want 'only for the rich', 'green fantasy', replacement, or 'distant future'; we wanted 'today, only with different priorities'.

For more on the conceptual foundations of the project, check out this long-form interview about the making of Epoch Optimizer with TouchDesigner makers Derivative.

Thanks very much to AWS for the faith and support in seeing this project through, and for putting sustainability on the stage at re:Invent.

All images on this page are AI-generated with the exception of the photos of the installation itself.

_______________________________

AWS: EPOCH OPTIMIZER

Client: AWS

AV Support: Fuse

Special thanks:

comfyanonymous,

Dr.Lt.Data,

Ferniclestix,

Elburz Sorkhabi,

Tristan Valencia,

Eric Alba,

Dennis Schoeneberg

Built with:

AWS

TouchDesigner

Stable Diffusion

GOOGLE + DEEPLOCAL / GOOGLE WOOGLE MAKER [2022]

Nothing is juicier than an open-ended brief for a generative art moment centered on color. Just thinking about it calls to mind a million ideas, aesthetics, methods, references, and techniques.

Deeplocal worked with Google on a hero exhibit for their Chelsea store, starting with a deck of juicy, candy-colored, bubbly, translucent, squiggly, squishy reference, and a focus on personalization. Just enough agreement on style to narrow the field, and just enough variety to leave it wide open.

From a previous installation, Google already had a wraparound three-screen display, extended by a low-resolution array of LEDs nestled in a floor-to-ceiling cove of frosted glass tubes. The refresh, timed to coincide with the launch of the latest Pixel phone, was to use the Pixel as both a camera and as a controller for a generative art moment.

The phone was to capture an image of the user, extract the dominant colors from the image, and begin generating a one-of-a-kind art piece with a palette derived from the picture. At the end, an onscreen QR code offered wallpaper-formatted captures of the unique artwork for the user to take away. (Try scanning the QR on this page!)
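The write-up doesn't specify how the dominant colors were pulled from the photo. One common approach is k-means clustering over the image's RGB pixels; the minimal sketch below assumes the photo has already been decoded (and ideally downsampled) into a list of `(r, g, b)` tuples, and the function name `dominant_colors` is hypothetical, not the production code.

```python
from collections import Counter


def _dist2(a, b):
    """Squared Euclidean distance between two RGB colors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def _nearest(p, centroids):
    """Index of the centroid closest to pixel p."""
    return min(range(len(centroids)), key=lambda i: _dist2(p, centroids[i]))


def dominant_colors(pixels, k=5, iterations=10):
    """Return up to k palette colors via k-means, largest cluster first."""
    # Deterministic seeding: start from the k most common distinct pixels.
    centroids = [tuple(map(float, p)) for p, _ in Counter(pixels).most_common(k)]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in pixels:
            clusters[_nearest(p, centroids)].append(p)
        updated = [
            # Mean of each cluster; an empty cluster keeps its old centroid.
            tuple(sum(ch) / len(c) for ch in zip(*c)) if c else old
            for c, old in zip(clusters, centroids)
        ]
        if updated == centroids:
            break  # converged: assignments no longer change
        centroids = updated
    # Rank clusters by size so the palette leads with the most prominent color.
    sizes = Counter(_nearest(p, centroids) for p in pixels)
    order = sorted(range(len(centroids)), key=lambda i: -sizes[i])
    return [tuple(round(ch) for ch in centroids[i]) for i in order]
```

For a mostly-red photo with a blue accent, `dominant_colors(pixels, k=2)` returns a red first and a blue second. Running k-means over every pixel of a full phone frame in pure Python would be slow, so downsampling first is the practical choice.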

For the project, we explored a handful of camera-based interactions for a variety of colorful, abstract, mirrored, dichroic, chromey, confetti, bouncy, squishy visual directions (a few rejected ideas pictured at the bottom of the gallery), eventually landing on a worm / tube / squiggle Google named the ‘Woogle’.

Our front-end system consumed a palette and state-change commands from the phone and middleware layers, using them to drive a high-resolution, smooth-as-silk Woogle experience for hundreds of customers a day, for a run that continued, by popular demand, long beyond its initially scheduled window.

_______________________________

GOOGLE: WOOGLE MAKER

Client: Google

XD, Software, Systems - Design & Implementation: Deeplocal

Generative Art Moment / Snek: Noah Norman / Hard Work Party

CHEESE [2022]

In 2022, fascinated by cutting-edge diffusion fine-tuning techniques, we joined forces with a small team of developers and dreamers to make Cheese, an entirely new kind of social network that asks - and answers - the question:

‘What if social media were

actually,

literally,

truly fake?’

All profiles on Cheese are populated exclusively with AI-generated images. Users have a private ‘camera roll’ of text-to-image generations from which they can choose to post publicly.

On the home feed, users can choose to hit a ‘Do Me’ button on any image they see, getting variations based on the same prompt but with their own likeness as the subject. Prompt creators are incentivized with app credits for every time someone ‘remixes’ their prompt.

When we put Cheese in front of users, people lost their minds - and weeks of their free time - to pure creative joy. Here’s what we heard:

“I can’t stop scrolling.”

“This is the most addictive app I have ever used.”

“This is the only social network I’ve ever been interested in.”

“Just lost a week of work to Cheesin’.”

“I’m hooked on that Cheese.”

“It’s going to start WWIII but it’s great.”

Of course, when designing a system like this, privacy and safety were top of mind. Our rules and terms were carefully considered to codify that users should own their likeness, and should be in full control of a model that is capable of making images of them.

• Users’ generations remain private until posted

• Users own their posted images and AI model

• Zero-tolerance policy for abuse

• Cheese may use users’ models to generate images only for internal testing

We barely scratched the surface of our dream roadmap with this experiment. If you want to know more about AI image-making or our visions for AI-powered creativity, hit this contact link.

To read more about the thinking behind Cheese’s interaction design and other related projects, check out our blog post on Generative AI in Production.

Cheese Team:

Product Concept and UXD: Noah Norman and Stephanie Lopez

Lead Dev: Paul Szerlip

Backend and Front End Dev: Evan Lee

Biz Advisor and Blue Ocean Research: Eric Rachlin

ROSETTA HALL [2019]

After dinner service, Boulder’s Rosetta Hall does a neat trick. The opulent brass and marble food hall transforms in the space of a moment into a grown-and-sexy nightclub. Two years after opening, that moment still gets a cheer from the crowd.

Our conversations with Rosetta’s owners began before the renovation of the historic downtown theater broke ground, at a time when the project’s identity was just taking shape, and when the reflected ceiling plan was still about a year off.

The request was for a system that would make an elegant statement while providing daylighting in an elevated, European-style food hall environment … but then drive the vibe as a full-on club lighting system after hours.

We knew from experience that the best way to make a large impact in a space of this size was to make a distributed system, and that the best way to make a limited budget stretch was to make a system of modular, repeated components.

With that brief, we decided on the triangle as the fundamental element of two Platonic solids: the octahedron and the icosahedron.

With one custom triangular, 16-bit 15-node RGBW LED PCB we were able to make 20 pendant lights of aluminum and acrylic that provide circadian-adapted daylighting, and by night flex 3D, volumetric, UV-unwrapped, audio-responsive dynamic looks that consistently evoke gasps from the crowd when the dinner-to-club changeover goes down.
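The daylighting curve itself isn't described here. As a minimal sketch, assuming a hand-authored table of hypothetical keyframes (hour of day, correlated color temperature in kelvin, brightness), a circadian target can be linearly interpolated over the day and the brightness quantized to a 16-bit channel value like the PCB's. Mapping a color temperature onto the RGBW emitters is a separate calibration step that this sketch does not attempt.

```python
from bisect import bisect_right

# Hypothetical keyframes: (hour of day, CCT in kelvin, brightness 0..1).
# The last row repeats the first at hour 24 so the curve wraps at midnight.
KEYFRAMES = [
    (0.0, 2200, 0.10),
    (7.0, 2700, 0.40),
    (12.0, 5000, 1.00),
    (17.0, 3500, 0.70),
    (21.0, 2400, 0.25),
    (24.0, 2200, 0.10),
]
_HOURS = [k[0] for k in KEYFRAMES]


def circadian_target(hour):
    """Linearly interpolate (CCT, brightness) for any hour; wraps at 24."""
    hour %= 24.0
    i = bisect_right(_HOURS, hour) - 1  # keyframe at or before this hour
    h0, t0, l0 = KEYFRAMES[i]
    h1, t1, l1 = KEYFRAMES[i + 1]
    f = (hour - h0) / (h1 - h0)
    return t0 + f * (t1 - t0), l0 + f * (l1 - l0)


def to_16bit(level):
    """Quantize a 0..1 brightness to a clamped 16-bit channel value."""
    return max(0, min(65535, round(level * 65535)))
```

At 9:30 in the morning this yields roughly 3850 K at 70% brightness. A 16-bit channel matters at the dim end of a curve like this, where 8-bit steps become visible.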

The process of integrating the system with the building involved extensive pre-visualization in VR, close collaboration with the architecture, interior design, and MEP teams, and a detailed mounting package capable of adapting to constantly changing as-built conditions throughout the construction process.

Our system is entirely autonomous, driving not just our pendants but every lamp in the entire building, including those in the bathroom, in the lobby, and in the kitchens, making the circadian daylighting subtly seamless and the nighttime party looks completely immersive.

With all this firepower, you’d be forgiven for expecting a lot of color and flashing lights, but we brought our signature hyper-minimal approach to designing the durational, generative looks that take months to play out in full.

Our philosophy is that permanent installations should withhold their most exciting and splashy moments for the rarest of occasions, instead exhibiting subtle and nuanced behavior that invites and rewards closer inspection, hinting at a complex inner life.

_______________________________

ROSETTA HALL: INTERIOR STELLATION

Created by: Hard Work Party

Creative Direction, Technical Direction, Software, Animations: Noah Norman / Hard Work Party

Fabrication: Leo Zacharias / Modular Fabrications, Ltd.

LED Engineering and Manufacturing Supervision: David Crumley

Architectural Integration and Mounting Systems: Ben Gray / Grayscale Studio

Installation Dream Team: Noah Norman, Ben Gray, David Crumley, Leo Zacharias

Control Surface: Chuck Reina / Astounding Company

Genius-Level Early Software Help: Landon Thomas

Major Thanks for Rubber Ducking, Get Out Of Jail Free, and Tough Spot Solves: Elburz Sorkhabi

Some photos courtesy of: Rosetta Hall and Alexa Hamed

Built with love and TouchDesigner.

NETFLIX + AV&C / INVISIBLE FRAME [2021]

There’s something beautiful about a simple brief. This one was almost as simple as it gets:

‘Netflix wants to make a billboard disappear.’

For a brand new all-LED billboard thrust into the vibrant sky above LA’s Sunset Boulevard, the goal was to make the physicality of the thing vanish, for the content to seemingly float, tacked invisibly to the peaches and whites and teal gradients of the California sky behind.

For AV&C and partner Daktronics, who boldly said yes to what was in reality a pretty crazy idea, we built a 24/7/365 automated self-color-correcting video pipeline that made the thin borders of the giant display vanish into the air, against, it seemed, the will of science itself.

Many said it couldn’t be done. They should head out to Sunset Boulevard with a crow to eat and send us a picture of themselves eating it, because we did it.

To be totally fair, this was a quixotic mission. The type of PTZ cameras that can withstand 24/7 operation aren’t famed for their accuracy and don’t sport the kind of ultra-precise controls one would want for a task like this, and the kind of LED displays they install for roadside billboards are similarly not instruments of great subtlety.

But there was a great amount of will, so we found a way, using truly exotic LED color science, arcane camera control APIs, grilling the LED manufacturers at length about edge cases and undocumented behavior, astronomical calendric automation, and endless testing through countless sunsets and sunrises in Pennsylvania, South Dakota, and LA.
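The actual color pipeline isn't published, and the real system involved far more than this (LED color science, camera control, sun-position scheduling). As a simplified sketch of just the core closed-loop idea, assuming the camera can sample both open sky and the display's border content in the same frame as linear RGB, each channel's output gain can be nudged toward the ratio between the two. `correct_gains` and its parameters are hypothetical.

```python
def correct_gains(gains, sky, panel, rate=0.5, limits=(0.25, 4.0)):
    """One damped step of per-channel multiplicative gain correction.

    gains: current output gain per channel (R, G, B)
    sky, panel: linear-light RGB samples of open sky and of the display
    rate: fraction of the log-space error corrected per step; < 1 damps noise
    """
    lo, hi = limits
    corrected = []
    for g, s, p in zip(gains, sky, panel):
        if p <= 0:
            corrected.append(g)  # no usable measurement for this channel
            continue
        g_new = g * (s / p) ** rate
        corrected.append(min(max(g_new, lo), hi))  # clamp to a safe output range
    return corrected


if __name__ == "__main__":
    # Toy simulation: the panel renders each channel with an unknown response error.
    sky = (0.60, 0.70, 0.90)
    response = (0.80, 1.00, 1.25)  # hypothetical per-channel error
    gains = [1.0, 1.0, 1.0]
    for _ in range(30):
        panel = [g * r * s for g, r, s in zip(gains, response, sky)]
        gains = correct_gains(gains, sky, panel)
    print([round(g, 3) for g in gains])  # gains approach 1 / response
```

With `rate=0.5` the remaining error halves in log space each step, which trades convergence speed for immunity to a single noisy camera frame; a real rig would also need to hold corrections when the camera can't see clean sky.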

Drive east on Sunset, do a double-take, and think of us.

_______________________________

NETFLIX: INVISIBLE FRAME

Client: Netflix

XD, Software, Systems - Design & Implementation: AV&C

Technical Direction: Noah Norman / Hard Work Party

November, 2021

Made with love and TouchDesigner.

TWITCH / ELSEWHERE SOUND SPACE [2021]

Elsewhere is a beloved Brooklyn music institution and the heart of a sprawling, inclusive community of emerging music fans. By April 2020, the COVID crisis had effectively shut down live music performance in NYC. While many organizations turned to streaming to deliver facsimiles of the experience of seeing music live, our partnership with Elsewhere focused on imagining an entirely new medium in which to experience live music in the most interactive, internet-first way possible.

Nowhere does this approach make more sense than on Twitch. With Twitch's support, we dreamed up Elsewhere Sound Space, a comedy sci-fi space spa cult music show with an improvised, nonlinear format driven by bidirectional communication between the talent, the software, and the audience.

To make it happen, we designed and built a hybrid uber-application and COVID-safe studio in the quiet halls, lobbies, and coat check of Elsewhere itself.

The production process for every episode involves a blank sheet of paper and an attempt to break down conventions and subvert expectations while winking at tropes from traditional storytelling.

The fourth wall is transparent on Twitch and our dynamic, software-enabled visual storytelling allows us to interrogate the show-viewer relationship in new ways every time we build a scene.

When designing the systems and software for the program, we approached every touchpoint as a distinct user experience design task. On-site our users include the host, the guest, the technical director (us), the call-in producer, and the sound tech. Online our users are the viewers watching live on Twitch.

Every user’s needs, sensory bandwidth, and interaction capability profiles differ based on their role in shaping the experience, and thus every user’s interface to the show is entirely custom and evolves rapidly with the changing capabilities and needs of the show.

The program offers viewers the ability to influence the course of the plot through two flavors of voting, by purchasing attention from the host via the channel points system, by using keywords in the chat, and by contributing bits (cheering) to progress towards comedic moments onscreen.
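The show's voting code isn't shown here. As a minimal sketch of the keyword-in-chat flavor only, assuming chat arrives as `(username, text)` pairs in order and that each viewer gets one vote they may change, a tally might look like this; the `!a` / `!b` keywords and `tally_votes` are hypothetical.

```python
from collections import Counter


def tally_votes(messages, options):
    """Count one vote per viewer; a later message overrides an earlier vote.

    messages: iterable of (username, text) in arrival order
    options: mapping of lowercase keyword -> option label
    """
    latest = {}
    for user, text in messages:
        for word in text.lower().split():
            if word in options:
                latest[user] = options[word]
                break  # only the first keyword in a message counts
    return Counter(latest.values())


chat = [
    ("ana", "!a yes"),
    ("bo", "I pick !B"),
    ("ana", "actually !b"),
    ("cy", "hello"),
    ("dee", "!a"),
]
tally = tally_votes(chat, {"!a": "door", "!b": "window"})
print(tally.most_common(1))  # [('window', 2)]
```

Keying the tally by username rather than counting raw messages keeps a single enthusiastic viewer from spamming the outcome.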

For a more in-depth discussion of interaction design for the show, check out our blog post on the topic:

Mass Interaction Design: One to Many to One

In the host’s space, a teleprompter-like heads-up interface gives realtime information about users’ interactions, messages in the chat, new subscribers, prompts from backstage, and bit contributions, while displaying run of show information and a view into the guests’ space.

In their studio, the guest is flanked by four screens displaying the chat, the live program feed, a mirrored view of the guest themself knocked out of their chromakey background, and messages from the technical director.

Among the growing list of features of the core application that drives the show are:

a full-featured scene-building production video switcher

realtime green screen and face-tracking

interactive special effects

use of the Twitch API, PubSub, and IRC chat architectures to enable dozens of forms of fan interaction

an omniscient and eerily timely ticker

dynamic and interactive titles, graphics, and credits

3D ‘virtual production’-style sets and cartoonish 2D compositions featuring incredible artwork by Dark Igloo

squishy, tactile interactive spaces

dynamic physical control surfaces for host and technical director

on-set system-controlled practical (physical) gags like a receipt printer and motorized candy machine

live video callers composited into 3D scenes

chat-bots

live control of music playback and sound effects

etc. etc.

Elsewhere Sound Space aired monthly on Twitch in early 2021 to live audiences of over 10,000 and has been covered in:

Harper’s Bazaar

Billboard

Time Out

Derivative

Brooklyn Magazine

BK Reader

The Hype Magazine

Musical guests included Princess Nokia, Starchild and the New Romantic, and Paperboy Love Prince.

_______________________________

Elsewhere Sound Space

Created by: Elsewhere Studios x Hard Work Party

Technical Direction, Show Software, Realtime Visuals: Noah Norman / Hard Work Party

Art Direction: Dark Igloo

Creative Direction: Jake Rosenthal (Elsewhere) & Noah Norman

Produced by: Jake Rosenthal (Elsewhere)

Creative Writing: Ashok "Dap" Kondabolu, Dark Igloo, Peter Smith, Noah Norman

Studio Direction: Chris Madden

Sound Design: Chris Madden

Live Editing: Noah Norman / Hard Work Party

Live Sound Engineer: Alex Slohm

Production Manager: Alex Pacheco

Media Strategy: David Garber

Prop Styling and Production Assistance: Turiya Madireddi

Music Licensing: Rachel Byrd

Talent Booking: Rami Haykal (Elsewhere)

Production Consult: Chris Willmore

Still Photography: Luis Nieto-Dickens

Twitch Glue Code: Charles Reina

Special Thanks:

Will Adams, Jesse Ozeri

Elburz Sorkhabi, Charles Reina

Dandi Does It,

Lauren Brady, Samuel Bader

Landon Thomas,

elekktronaut, paketa12

Built with love and TouchDesigner.

ENTROPY [2021]

In 1969, Alvin Lucier recorded the sound of his voice, then played it back in the same room. Over and over, until all that remained was not his voice, but simply the drone of the most resonant frequencies in the room.

In 2021, we wondered what AI models would do if you fed them their own output, over and over, until there was nothing left of the initial prompt that kicked off the chain, and all that remained were the hallucinations of the AI.

Using the then-state-of-the-art generative AIs VQGAN+CLIP and GPT-3, and collaborating pseudonymously as NA1 with illustrator and programmer Chuck Reina (working as ZNO), we created a set of machine-hallucinated playing cards.

Each of the 50 decks of 60 cards was the result of a recursive process, feeding the previous cards in the deck back into the system until narratives, characters, histories, compulsive tics, and in-jokes emerged. The images were also made recursively, resulting in a flip-book appearance.
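The recursion described above can be sketched generically. This assumes a `generate` callable standing in for the text model (the project used GPT-3; here it is a hypothetical placeholder) and a sliding window of recent cards as the prompt, so the seed prompt eventually falls out of the context entirely, much like Lucier's voice dissolving into the room.

```python
from typing import Callable, List


def grow_deck(seed_prompt: str, generate: Callable[[str], str],
              size: int = 60, window: int = 8) -> List[str]:
    """Grow a deck by feeding the most recent cards back in as the next prompt.

    The seed stays in the prompt until `window` cards exist; after that the
    model is working purely from its own output.
    """
    history = [seed_prompt]
    while len(history) - 1 < size:
        context = "\n\n".join(history[-window:])  # only recent cards fit in the prompt
        history.append(generate(context))
    return history[1:]  # the seed prompt itself is not a card
```

The window size is the main knob: a small window forgets the seed sooner and lets the deck drift faster into its own tics and in-jokes.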

The theme of the collection was Entropy, pitting the force of dissipation against life's tendency toward order.

In the world of the project, the cards were the result of a failed automation initiative at a major collectible card game company. In the eyes of the commissioning executives, the increasingly bizarre and unhinged output of their recursive AI system was unusable, so they scrapped the project and disconnected the machine that created it.

But an employee of the company became convinced that the AI was sentient, and in an effort to raise awareness of the machine's plight, she smuggled the cards to a local comic shop owner to sell at retail.

The website for the project featured a brilliant mini comic by ZNO and a classic 90s-style RPG interface for interacting with Ian, the comic shop proprietor, and for flipping through the cards in the store binder.

In addition to the high-resolution card images, we made poster-sized contact sheets and animated GIFs for each deck.

To read more about the ideas and process behind the project, check out this thoughtful writeup/interview with NA1 in nftnow.

Card Copy and Artwork: The Machine

Card Copy and Artwork Code, Masters Posters: Noah Norman / NA1

Data Management and Curation Tools, Card Background, Back, and Symbol Art, Ian, Entropy Website, Entropy Comic Book: Chuck Reina / ZNO

Acknowledgements:

Esser, Rombach, and Ommer: VQGAN

OpenAI: CLIP and GPT-3

Katherine Crowson and Ryan Murdock: VQGAN+CLIP technique

HBO + THE MILL / LOVECRAFT COUNTRY: SANCTUM [2020]

HBO’s Lovecraft Country was a genre-defying fantasy-horror that dealt with serious issues in a surreal way. To promote the show, The Mill and HBO dreamed up a COVID-safe series of virtual ‘tours’ through an exquisitely-crafted liminal VR space, populated by site-specific Afro-futurist sculptures by Black artists including David Alabo and Adeyemi Adegbesan, tied together with a narrative voiced by the actors from the show, and capped off by a performance from Janelle Monáe.

The invited guests shared the space in groups of roughly 20, inhabiting gorgeous and surreal bodies of their own choosing, roaming freely through the virtual spaces, and interacting with one another and puzzles embedded in the spaces around them.

For the larger audience at home, the experience was streamed from the first-person vantage of an anonymous participant: a VR cinematographer. The streaming audience had their own layer of interaction available, where onscreen riddles invited viewers to guess answers in the YouTube chat, and the correct answer would invoke a ‘spell’ in the viewer’s name and take over, warping and bending the entire stream for a short time.

For this project, HWP helped The Mill design and engineer a streaming architecture and custom software that handled live audio/visual effects, multi-source switching, playback, recording, live titles, and streaming. We also created a smokey signature title effect and dozens of A/V ‘spells’, about 6 of which ended up being used in each of the 2020 live streams.

The project was timely and spectacular, earning over 100 press features and 13 million social impressions in the first week alone.

_______________________________

HBO + THE MILL: LOVECRAFT COUNTRY: SANCTUM

Client: HBO

Brand: HBO

Production Company: The Mill

Creative Director: Rama Allen

Executive Producer: Desi Gonzalez

Experience Director: Aline Ridolfi

Design Director: Sally Reynolds

Technical Director: Kevin Young

Senior Producer: Hayley Underwood Norton

Strategist: Min Wei Lee

Developer: Kim Koehler

Streaming and Live Graphics System Design, Custom Software, Realtime Graphics: Noah Norman / Hard Work Party

September, 2020

INDY 500 [2021]

For 2021’s Indianapolis 500, title sponsor NTT wanted to show off the power of their Smart Platform by using the thousands of streams of data coming off the car and track instrumentation to provide altogether new insights into the strategy of Indycar racing. These data visualizations needed to work for the 300,000 fans in attendance, on the colossal LEDs on the ‘Pagoda’ in the center of the track, on the LCDs distributed throughout the stadium, and on the TVs, computers, and mobile phones tuned into the Indycar YouTube channel during the race.

Prior efforts had focused on what we call ‘marching ants’ visualizations of the progress of the cars on the track, an approach that provides little to no additional context and really serves only to strip the excitement out of the race.

We saw an opportunity to help veterans and new fans alike understand the big picture strategy that informs decision-making on the track.

As always, our approach began with interviewing subject matter experts and stakeholders. We had conversations across the Indycar and NTT teams to build intuition and broaden our own understanding. With insights gathered from that research and from a deep, deep, deep dive into the texture of captured data from past races, we looked for new ways to tell the stories in the numbers.

The resulting discoveries and the visualizations they produced succeeded wildly, with even Indycar executives expressing surprise at how previously-nonexistent metrics added new context to the metagame they run.

_______________________________

INDY 500

Design and Code: Hard Work Party

Creative Agency: Mighty&True

Client: NTT

Photos Courtesy of: Hal Lovemelt / Mighty&True

Some screen caps pictured here were made in testing with prior race data and placeholder images, so if you’re an Indy fan and something seems amiss, good eye!

Built with love and TouchDesigner.

ACCOR + AV&C / SEEKER [2018]

While technically a video shoot, Le Club Accor Hotels’ Seeker project brought together all the challenges of a live-to-tape event, live special effects, and several interactive digital media moments into one seamless multi-camera experience for a series of influencers and visitors from the press.

The premise, conceptualized and directed by The Mill, was to use biometric indicators to gauge guests’ reactions to a series of prompts, and to use those indicators to suggest an appropriate vacation destination from the Accor portfolio.

For this project, working for AV&C, we provided technical direction services, overseeing dynamic content on acres of curved LED walls, water gags, ferrofluids, multiple custom fabricated elements, and the choreography necessary to make it feel seamless again and again to the talent seeing it for the first time.

We also provided interaction and graphics development services, creating the ‘hero’ moment that asked the users to improvise movements in concert with a constellation of burning embers in an immersive environment.

This project was especially fun because it brought together some of our favorite collaborators from multiple teams for a few hectic days to make something we knew The Mill would knock out of the park.

Client: Le Club Accor Hotels

Agency: The Mill

Spatial Design, Animation, Show Control, Effects: AV&C

Sound Design: Antfood

Technical Direction, Realtime Graphics and Interactive Development: Noah Norman / Hard Work Party

NYCB ART SERIES / SANTTU MUSTONEN [2017]

The New York City Ballet Art Series continues the work started by New York State Theater architect Philip Johnson and NYCB founder Lincoln Kirstein, a curatorial tradition dating back to the theater's founding in 1964. The space houses a permanent collection that includes works by Jasper Johns and Lee Bontecou and, more recently, as part of the annual Art Series program, has hosted installations by Dustin Yellin, FAILE, and JR.

For their 2017 installation, the fifth in the new Art Series, NYCB commissioned Finnish artist Santtu Mustonen, whose work begins as paint and is presented here as digital animations that flutter and breathe like living brushstrokes.

For the 6-week installation, Hard Work Party provided technical direction, spec'ing playback hardware, projection, and lighting, as well as providing programming services, creating a custom, scheduled playback platform for the 4-channel video, accompanying audio, and lighting animations.

We worked closely with AV integrators SenovvA, who provided projection design and rental support, fabricators Gamma NYC, who designed, built, and rigged the screens, and Santtu's representation, Hugo and Marie, who coordinated the effort.

The scale of the finished piece is colossal. The projection geometry is borderline preposterous (we surprised even Panasonic with this one). The bang for the buck is stupendous (hat tips all around). The result is at once awesome and subtle.

Because of the scale and complexity of the projections, as well as the audience proximity to the screens, we used HAP Q, an open-source codec whose compression quality and performance were a must for this project. The compression allowed us to play back high-quality 4K video from an inexpensive commodity PC without losing the dynamics and subtlety of the busy and textured particle system.

For command and control, we used TouchDesigner. TouchDesigner's flexibility allowed us to continually grade the video playback for changing lighting and projection conditions, as well as to calibrate the smart lights used to illuminate the statues, both in terms of focus and color quality. Critically, TouchDesigner also allowed us to accommodate the ballet's scheduling needs, facilitating automatic projection, lighting, and sound strike and kill commands throughout the 6-week run without the use of external scheduling hardware.
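TouchDesigner internals aside, the scheduling logic can be sketched in plain Python. This is a hypothetical example with made-up show hours, reading "strike" as power-up and "kill" as power-down: rather than firing one-off timed events, it computes the state the installation ought to be in and emits commands only when that differs from the current state, so a reboot mid-run recovers on its own.

```python
from datetime import datetime, time

# Hypothetical schedule: weekday (Mon=0) -> (on, off). Dark on Mondays.
SCHEDULE = {day: (time(18, 0), time(23, 0)) for day in range(1, 7)}
SYSTEMS = ("projection", "lighting", "sound")


def desired_state(now: datetime) -> str:
    """Return 'show' if `now` falls inside the scheduled window, else 'dark'."""
    window = SCHEDULE.get(now.weekday())
    if window and window[0] <= now.time() < window[1]:
        return "show"
    return "dark"


def reconcile(current: str, now: datetime):
    """Return the target state and the commands needed to reach it from `current`."""
    target = desired_state(now)
    if current == target:
        return target, []
    verb = "strike" if target == "show" else "kill"
    return target, [f"{verb} {system}" for system in SYSTEMS]
```

Called on a timer (say, once a minute) with the last known state, `reconcile` is idempotent: repeated calls inside the same window produce no new commands.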

Santtu's work shines, and his 14-minute composition feels painterly, austere, and delicate writ large in the promenade. We're very proud of this one.

Production Company: Hugo and Marie

Artist: Santtu Mustonen

Producers: Jason Scott Rosen and Masha Spaic

Technical Direction, Systems Design, and Programming: Hard Work Party

AV Integration and Projection Design: SenovvA

Screen Fabrication and Rigging: Gamma NYC

Animation: Johnny Lee

Special thanks to Marquerite Mehler, Karen Girty, and Jill Jefferson at NYCB

Some photos courtesy of Alex Di Suvero and Hugo and Marie

JOHARI WINDOW [2022]

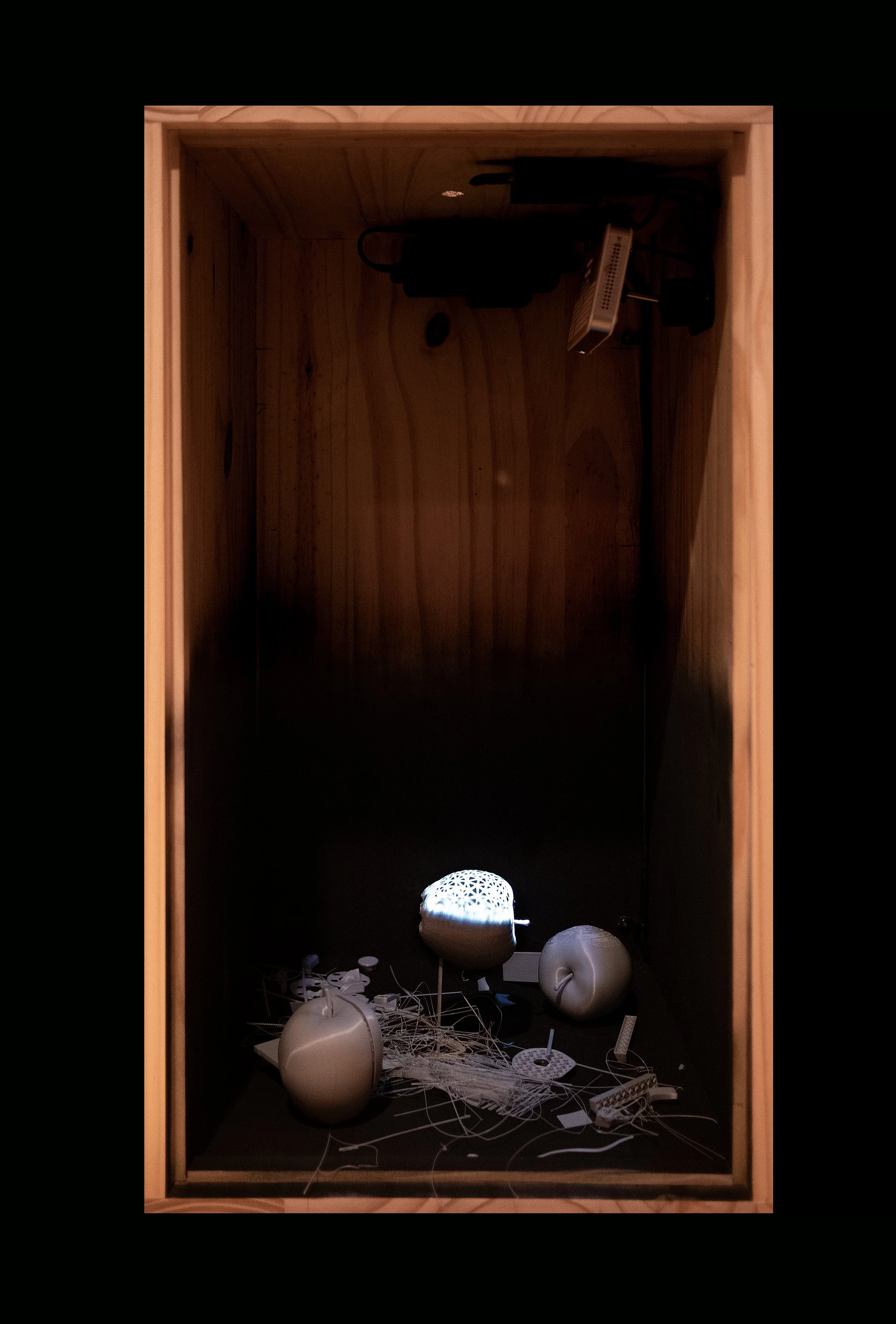

Pine, PLA, MEMS laser projector, spraypaint, media player, custom software

The Johari Window is a heuristic for understanding one’s feedback/disclosure model of self-awareness.

On top of the enclosure is a peephole, centered on the only one of the three apple shapes without visible imperfections. The electronic components of this work are invisible from this perspective, and the projected animation illuminates the ‘finished’ apple with a sequence of textures.

First we see a realistic apple skin, which in time appears to be peeled away to reveal dull and featureless grey metal, which is then peeled to reveal a wireframe, and so on.

This piece is displayed with its accompanying door seemingly torn off, lying on the floor beside the box. From the vantage of the open door, the projection reads more as a spotlight, and here our eye is drawn to the detritus surrounding the ‘subject apple’: a pile of cast-offs, threads, support material, and partial prints.

Johari Window quadrant image courtesy of Wikipedia.

KORS + AV&C / WALKBOX [2017]

For Michael Kors’ Shanghai ‘The Walk’ influencer event, NYC creative agency THAT envisioned a photobooth writ large — a three-camera shoot moving down a 50’ walk against an LED wall placing the subjects in scenes shot on location around the world.

THAT wisely tapped NYC tech wizards AV&C, who knew that to produce an edited multi-camera video, set to music with titles, every 60 seconds for 5 hours straight, they would need an inhuman editor.

For that, they brought on Trash TV, a still-stealth startup developing two dovetailing pieces of machine learning software: one to tag video, one to edit it. This provided a crucial piece of the puzzle: a near-instantaneous edit.

Beginning with Trash’s software as the cornerstone, Hard Work Party provided technical direction on a high-bandwidth humans-and-machines video pipeline, beginning at a user-centric check-in and stage management GUI, proceeding through an almost constantly-rolling live stage, onward to capture, ML edit, human edit pass, QA, transcode, and handoff.

The team created a stateful process management system in which each user’s progress was managed from a handful of customized interfaces: the check-in iPads, a REST API for the automated stages, and browser-based GUIs for the postproduction team.

On the front, that user-centric flow dovetailed with a stage-focused state machine, handling video playback, video capture, lighting, and projection on a downstage scrim to cover resets, all driven seamlessly by the stage manager’s tablet.

Making all that possible - the pipes - was a stack of high-availability storage with the capability to serve up 32 concurrent streams of ProRes 422 at 1080 while keeping realtime sync with a hot backup. While invisible, that combination of storage and redundancy was critical to making it possible to deliver 273 30-second videos in 5 hours.
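The figures above can be sanity-checked with some back-of-envelope arithmetic. This assumes roughly 147 Mb/s per stream, Apple's published target data rate for ProRes 422 at 1920x1080 and 29.97 fps; the event's actual frame rate isn't stated, and the sketch ignores the mirrored writes to the hot backup.

```python
# Back-of-envelope numbers for the storage and delivery figures.
# ASSUMPTION: ~147 Mb/s per stream (Apple's target for ProRes 422,
# 1920x1080 at 29.97 fps); the event's real frame rate is not stated.
PRORES_422_1080_MBPS = 147
STREAMS = 32

aggregate_mbps = PRORES_422_1080_MBPS * STREAMS   # megabits per second
aggregate_mb_per_s = aggregate_mbps / 8           # megabytes per second

# Delivery cadence: 273 finished videos over a 5-hour run.
VIDEOS = 273
RUN_MINUTES = 5 * 60
minutes_per_video = RUN_MINUTES / VIDEOS

print(f"{aggregate_mbps} Mb/s = {aggregate_mb_per_s:.0f} MB/s")
print(f"one finished video every {minutes_per_video:.2f} minutes")
```

Under that assumption, 32 streams need about 4.7 Gb/s (roughly 588 MB/s) of sustained reads, and the delivery rate works out to one finished video about every 66 seconds, close to the one-per-minute target.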

The system was seamless and invisible, the videos were viewed by millions, and the event was nominated for a Webby and covered in WWD, Vogue, and probably a billion Chinese-language publications.

Client: Michael Kors

Agency: THAT

Experience, Software, Systems - Design & Implementation: AV&C

Technical Direction: Noah Norman / Hard Work Party

Machine Learning Edit Systems: TRASH

Honors:

Nominated: Webbys 2018

November 15, 2017

KEXP (2016)

KEXP is more than a radio station: it's an institution, both for Seattle and for music fans worldwide.

The public station is known not just for its radio or web stream broadcasts, but increasingly for its polished and intimate video recordings of live in-studio performances from legends and up-and-comers alike.

In its former, more modest studios, KEXP's rather small live performance room became known for the Christmas lights twinkling in the background bokeh.

Recently, through the largesse of its fans and a number of supporting organizations in the Seattle area, KEXP was given entirely new, updated facilities, including a vastly upgraded live performance room. Along with the new space, it was time to upgrade the look.

For Listen, in a project supported by Microsoft as part of their Music x Technology initiative, we teamed up with LED engineer and technical director David Crumley and our strategic partners Slanted Studios to create a wraparound low-resolution LED wall with custom software to drive it.

The system is, at its heart, driven by TouchDesigner, which is used to generate about 15 custom animations (a mix of particle systems, 3D geometry, fluid simulations, and basic shader approaches), with some looks using the Kinect to capture and animate performers' movements. All are controlled from a Surface tablet, from which the team can change any look's colors, speed, brightness, and masking on the fly.

The LED display itself is a custom-manufactured system to Crumley's spec, permanently installed into the structure of the room. Inherent in the system design was an added challengeportunity (opporchallenge?) in that alternating LED columns were offset by 6" both vertically and in depth, creating opportunities for parallax effects and challenges in signal generation and previs.
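To make the offset columns concrete, here is a hypothetical previs helper in Python. The 6" offset comes from the write-up; the pitches, the choice of odd columns as the offset ones, and the function names are assumptions. `parallax_shift` uses simple similar-triangles geometry: for a viewer at distance D from the front plane who moves sideways by dx, a point set back by z appears to slide dx * z / (D + z) relative to the front-plane pixels.

```python
from typing import List, Tuple

OFFSET_IN = 6.0  # from the write-up: alternating columns offset 6" up and back


def pixel_positions(cols: int, rows: int, col_pitch_in: float,
                    row_pitch_in: float) -> List[Tuple[float, float, float]]:
    """Return (x, y, z) in inches for every pixel, column by column.

    x runs along the wall, y is height, z is depth behind the front plane.
    ASSUMPTION: odd-numbered columns are the offset ones.
    """
    points = []
    for c in range(cols):
        shift = OFFSET_IN if c % 2 == 1 else 0.0
        x = c * col_pitch_in
        for r in range(rows):
            points.append((x, r * row_pitch_in + shift, shift))
    return points


def parallax_shift(z_in: float, viewer_distance_in: float,
                   viewer_dx_in: float) -> float:
    """Apparent sideways slide, measured on the front plane, of a point set
    back z_in inches when a viewer viewer_distance_in away moves viewer_dx_in
    sideways. Positive means it moves in the same direction as the viewer."""
    return viewer_dx_in * z_in / (viewer_distance_in + z_in)
```

For example, a camera 114" (9.5') from the wall sliding 12" sideways sees the set-back columns shift 0.6" against the front ones, which is the kind of relative motion that reads as depth in a slow camera move.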

After a successful kickoff performance in January 2017, we look forward to seeing what the KEXP team do with the system and seeing the twinkle in the background of their videos for years to come.

Production Companies: Hard Work Party & Slanted Studios for Listen and Microsoft

Producer: Jen Vance

Technical Direction and LED Engineering: David Crumley

Software Development and Realtime Animations: Noah Norman and Michelle Higa

Additional Animations: Michelle Higa and Terra Henderson

Surface Programming: Charlie Whitney

Jan 11, 2017

Photos 1 and 4 [the good ones] by Amber Knecht for KEXP

VIACOM / COMPOSITION VI (2015)

Interactive Installation for 12 Players

(2) x UHD Video Walls

TouchDesigner, Kinect, Nvidia Quadro K6000, Custom Server Hardware

Composition VI is a site-specific artwork installed in the lobby of Viacom's Times Square building at 1515 Broadway.

The piece was the result of a series of independent creative iterations, beginning with meditative live image manipulation and eventually arriving at this complex force and particle system using Kinect input to drive interaction.

As visitors move through the lobby space, their paths and body movement are flattened into an orthographic space, spanning both massive video walls across the lobby, rendered as directional flechettes, and painted with a dynamic color system.

A sophisticated rule set, refined through extensive user testing, assigns attractive and repulsive forces and particle emitters to users' heads and hands. The result is a balanced system that responds immediately to passersby, welcomes initial exploration, and yet rewards long-term play and engagement.
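The write-up doesn't spell out the actual rule set, so what follows is only a generic sketch of the kind of system it describes: tracked joints flattened orthographically (depth discarded), each acting as a softened inverse-square attractor or repulsor on particles, integrated once per frame. `ForceSource`, `flatten`, `net_force`, `step`, and every constant are hypothetical, and particle emitters are left out.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec = Tuple[float, float]


@dataclass
class ForceSource:
    """A tracked joint acting on particles. Positive strength attracts,
    negative repels. Which joints attract or repel is a hypothetical choice."""
    pos: Vec
    strength: float


def flatten(joint_xyz: Tuple[float, float, float]) -> Vec:
    """Orthographic flattening: keep x (along the walls) and y (height),
    discard depth z."""
    x, y, _z = joint_xyz
    return (x, y)


def net_force(p: Vec, sources: List[ForceSource], softening: float = 0.1) -> Vec:
    """Sum softened inverse-square forces from every source on point p."""
    fx = fy = 0.0
    for s in sources:
        dx, dy = s.pos[0] - p[0], s.pos[1] - p[1]
        d2 = dx * dx + dy * dy + softening * softening  # never zero
        d = math.sqrt(d2)
        mag = s.strength / d2
        fx += mag * dx / d
        fy += mag * dy / d
    return (fx, fy)


def step(p: Vec, v: Vec, sources: List[ForceSource],
         dt: float = 1 / 60, drag: float = 0.98) -> Tuple[Vec, Vec]:
    """One semi-implicit Euler step: update velocity (with drag), then position."""
    fx, fy = net_force(p, sources)
    vx = (v[0] + fx * dt) * drag
    vy = (v[1] + fy * dt) * drag
    return (p[0] + vx * dt, p[1] + vy * dt), (vx, vy)
```

Orienting each flechette along its particle's velocity would give the directional look described above; the softening term keeps forces finite when a particle passes right through a hand.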

We created this piece with our old pals and collaborators Slanted Studios and we're already looking forward to more fruitful collaborations and wondering why we haven't been doing this stuff together all along.

This piece was commissioned by Viacom's Catalyst group, supported by Art at Viacom, and made with TouchDesigner.

Special thanks to Matt Hanson and Matt Herron at Catalyst for making this possible. Thanks also to Kathryn Henderson and Ana Kim at Slanted for making it real, and thanks to our rad group of test subjects for all the arm waving.

Honors:

Nominated: Animated Com Award, Stuttgart Festival of Animated Film

Production Companies: Slanted Studios & Hard Work Party

Artists: Michelle Higa Fox and Noah Norman

Producer: Kathryn Henderson

Optimization: Mary Franck

Production Assistant: Ana Kim

Special Thanks: Kinda Akash, Sougwen Chung, Yussef Cole, Guillermo Echevarria, Stuart Fox, Manna Hara, Erin Kilkenny, Gabe Liberti, Stephanie Lopez, Guilherme Marcondes, Nika Offenbac, Gabriel Pulecio, Dave Rife, Jake Rosenthal, Daniel Savage, David Schmüdde, Stephanie Swart, and Ed Uberia

Viacom Catalyst:

Director of Screen Content: Matt Hanson

VP/Executive Producer: Matt Herron

Senior Vice President, Brand Strategy & Creative: Cheryl Family

Video Documentation

Producer: Kathryn Henderson, Jennifer Vance

DP: Chris Willmore, D. Schmüdde

Editor: Ana J. Kim

Music: Mokhov

October 13 – November 17, 2015

PITCHFORK + JACK DANIEL'S / DISTILLATION IN WAVES (2019)

NDA WORK

We've probably done more boss work under NDA than stuff we can publicize, but NDAs are a real thing and we respect them.

Still, though, under cover of darkness, we've designed massive video pipelines, retail display systems, interactive artworks, and LED lighting designs, what-iffed immersive school buses, spatialized multichannel audio for virtual reality, and ghost-written a thousand proposals in all the media there are and a few made-up media, too.

Names that have trusted us big time with time and money in a fashion we can't yet discuss include:

IDEO

Gensler

HUSH

Deeplocal

The Mill

Duolingo

AV&C

Motionographer

The Smithsonian

American Museum of Natural History

The Barnes Foundation

Johnson & Johnson

New Inc

Wired

Amazon

The Art Directors Club

NBC Universal / Comcast

NOTHING IS BORING

Nothing is Boring is an interview show that gets into the weeds. Some shows focus on bios or explainers — we focus on the chewy center of every interview.

Listening to this show is like language immersion; you might not understand it all but you'll feel your brain growing.

Wonder what mechanical vibration specialists discuss when you leave the room? How do you design for machine knitting? What kind of latex is hot in the VFX scene? Can mastering engineers hear clock upgrades?

Subscribe and jump in the deep end over and over.

GIPHY / LOOP DREAMS (2016)

Giphy is where the internet goes for GIFs, and, increasingly, where artists go to showcase original work in low-res, bite-sized loops. In 2016, for the first time, Giphy and Rhizome.org wanted to bring GIF art, art that shares the GIF aesthetic, and art by artists known for their GIFs, into meatspace.

This meant, in various cases, high resolution, large scale, projection mapping, virtual reality, and interactivity, sometimes all at once.

We were thrilled at the chance to shoot from downtown with our old pals Dark Igloo on this one, finding creative solutions to technical challenges like calculating weird projection geometry for trompe l'oeil orthographic mapping, jiggering retractable ceiling tethers for idiosyncratic controller setups, and building a GIF-playing basketball arcade game.

Thanks to Giphy, Rhizome, Dark Igloo, Electric Objects, and Senovva for the alley-oop on this one. He's on fire!

GIFs and some images courtesy of Dark Igloo and GIPHY.

Artists’ works pictured, in order from top to bottom:

Dark Igloo

Phyllis Ma

Zach Scott

Sam Rolfes

Dark Igloo

Yung Jake, Mattis Dovier, and Jess Mac

Nicolas Sassoon

Phyllis Ma

Karan Singh

ADIDAS / HARDEN (2016)

To promote the new James Harden Adidas shoe launch, Set Creative took over Footlocker's midtown Manhattan special event space NYC33 for a slam-dunk contest / 1-minute skills challenge.

The idea and the event came together quickly: invite ballers, high school players, bloggers, and influencers to jam on a heavily-stylized half-court and get branded, sharable videos in their hands as fast as possible after they walk off the court.

Front of house, greeters used our custom iPad app to check in players and simultaneously create database records for fitters to pull shoes and shirts, and to create correlated records for the post team to coordinate distributing the finished assets.

For the video shoot, we crewed up with a full film production team, a 5-camera rig (with jib and overcrank portrait cam), running ISOs to decks back of house where a 3-person post crew cut down edits. Simultaneously, our DP cut the show to the in-house LED screen - a nice detail in the background of key shots.

Edited reels were piped through custom 'glitch' software with loads of shaders written to match the client's mood boards of a black and white, grainy, crushed and contrasty look with all kinds of analog and digital glitches timed just so to emphasize key moments in the action.

From the 'glitch station', we pushed finished assets up to our custom web services that created a styled microsite for each video, sent the player a text with a short-link, and delivered them a copy of their reel attached to an email for easy sharing from iOS to Instagram (an important edge case).

The system ran like clockwork, and by all accounts the finished assets exceeded expectations, thanks in no small part to Ed David's exceptional DP work. Check the vids on this page to see some of our favorites of the 130 cuts we delivered over 3 days of madcap shooting and editing.

Huge thanks to the amazing client, Set Creative, for the vision and trust to go big. Special thanks to Adam McClelland and the post crew for the overtime assist and for fitting it all in.

Production Company: Hard Work Party

for Set, Adidas, and Footlocker

Technical Direction, Systems Engineering, Software Development and Realtime Processing: Noah Norman

Backend Systems and Server Architecture: Chuck Reina

Postproduction Supervisor: Adam McClelland

Editors: Andrea Cruz, Shaheen Nazerali

Director of Photography: Ed David

Producer: Zack Tupper

Camera Operators: John Meese, Andrew McMullen, John Larson

1st AC: John Larson

DIT: Justin Hartough

Gaffer: TJ Alston

Electrician: Jordan Bell

Key Grip: Alexa Hiramitsu

Best Boy Grip: Brandon Barron

Production Assistants: Kyle Smith, Kyle Ostrom

Creative Direction and Additional Animations: Set Creative

December 2016

GIPHY / TIMEFRAME (2017)

To celebrate the 30th anniversary of the GIF, and Giphy's ongoing commitment to supporting the arts, in particular their own flavor of neon-tinged, devil-may-care, retro-inflected, minimally-antialiased net-art, Giphy put together their second Manhattan gallery show in the form of TIME_FRAME.

For this event, taking direction from the design minds of our friends at Dark Igloo, we helped stand up a variety of installations and design approaches while making the tech disappear into a seamless whole, putting the art front and center, as it should be.

Photos by Walter Wlodarczyk via Giphy

Artists’ works, from top to bottom:

Alan Resnick

Robert Beatty

Dark Igloo

Withering Systems

Olia Lialina and Mike Tyka

Nitemind

Dark Igloo

Dark Igloo

ASPECTS of SPUN POLYHEDRA: PLATONICS (2015)

This piece is part of an exploration of my ongoing obsession with visual artifacts — specifically, in this case, the wagon-wheel effect.

While relatively simple to create, documenting a 'clean' performance of this piece presented some interesting challenges, including tearing, gamma, compression, and transcoding issues, all due to the tight, drastic changes in lighting dynamics. The most salient compromise - banding related to JPEG compression - is prominent.
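The wagon-wheel effect itself is temporal aliasing, and it is easy to quantify. A shape with k-fold rotational symmetry about its spin axis looks identical every 1/k of a turn, so a camera or display sampling at fps frames per second can only observe the per-frame rotation modulo that period. The sketch below (function name hypothetical) wraps the true per-frame rotation into the interval nearest zero, which is the motion the eye tends to read. The right k depends on which axis a given solid spins around.

```python
def apparent_rotation(rev_per_s: float, fps: float, symmetry: int) -> float:
    """Apparent rotation speed (rev/s) of an object with `symmetry`-fold
    rotational symmetry spinning at rev_per_s, sampled at fps frames/s.

    Negative results mean the object appears to spin backward.
    """
    period = 1.0 / symmetry            # fraction of a turn between identical poses
    per_frame = rev_per_s / fps        # true rotation per frame, in turns
    # Only the rotation modulo `period` is visible; take the alias nearest zero.
    wrapped = per_frame - period * round(per_frame / period)
    return wrapped * fps
```

At 60 fps, a cube spinning at 15 rev/s about a face axis (k = 4) advances exactly a quarter turn per frame and appears frozen, while a single-marker wheel at 59 rev/s appears to turn backward at 1 rev/s. Any playback rate other than 60 fps changes the effective sampling, which is why the piece depends on 60FPS playback.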

More interesting, though, were issues related to the nature of this recording as documentation of a live performance. Keep an eye on the news section of this site for a future writeup.

Viewing in 60FPS in a darkened space with headphones turned up loud strongly recommended. The usual, really.

****Straight-up does not work on mobile. Requires 60FPS playback or you will be confused.****

****WARNING: This video may potentially trigger seizures for people with photosensitive epilepsy.****

Procedurally-generated models from Polyhedra Database used with permission from Felix Larreta.

Made in TouchDesigner.

MACY'S / NAUGHTY or NICE O' METER (2016)

In case you didn't know, Standard Transmission are the wizards behind the colorful, animatronic, interactive Macy's window displays that draw crowds of hundreds of thousands to their iconic Herald Square location during the holidays.

When they asked us to help with this Naughty or Nice o' Meter, patterned after the classic 'love tester' arcade games, we were excited just for the opportunity to visit Santa's workshop and watch the holiday sausage made.

For this build, we made a simple Python application that drives a series of relays based on interaction with a touch film placed on the glass. It's simple, but the trick was to make the system tunable so we could get the interaction just right as crowds of literally hundreds of thousands used it over the months it was up. Did we say hundreds of thousands?
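Here is a minimal sketch of what "tunable" can mean in a build like this, assuming the touch film reports how long someone holds their hand on the glass and that the meter is a ladder of relay-switched lamps. Neither assumption comes from the write-up, and `MeterTuning`, `meter_level`, `relay_states`, and `drive` are hypothetical; the actual hardware write is left to a caller-supplied function rather than any specific relay library.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class MeterTuning:
    """Hypothetical tunables; the real parameters aren't documented."""
    relays: int = 10            # lamps in the naughty-to-nice ladder
    min_hold_s: float = 0.3     # shorter touches are ignored as noise
    full_scale_s: float = 3.0   # hold this long to reach the top lamp
    jitter: int = 1             # random +/- lamps so results feel alive


def meter_level(hold_s: float, tuning: MeterTuning,
                rng: Optional[random.Random] = None) -> int:
    """Map a touch duration to how many relays (lamps) to switch on."""
    if hold_s < tuning.min_hold_s:
        return 0
    frac = min(hold_s / tuning.full_scale_s, 1.0)
    level = round(frac * tuning.relays)
    if rng is not None and tuning.jitter:
        level += rng.randint(-tuning.jitter, tuning.jitter)
    # A valid touch always lights at least one lamp, never more than exist.
    return max(1, min(level, tuning.relays))


def relay_states(level: int, tuning: MeterTuning) -> List[bool]:
    """Bottom-up on/off pattern for the relay bank."""
    return [i < level for i in range(tuning.relays)]


def drive(level: int, tuning: MeterTuning,
          set_relay: Callable[[int, bool], None]) -> None:
    """Push the pattern to hardware via a caller-supplied setter (hypothetical)."""
    for index, on in enumerate(relay_states(level, tuning)):
        set_relay(index, on)
```

Keeping every threshold in one dataclass means the feel can be retuned on site without touching the logic, and passing a seeded `random.Random` keeps the jitter reproducible during testing.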

Did you see it? Were you naughty??

HISTORY OF VIACOM (2016)

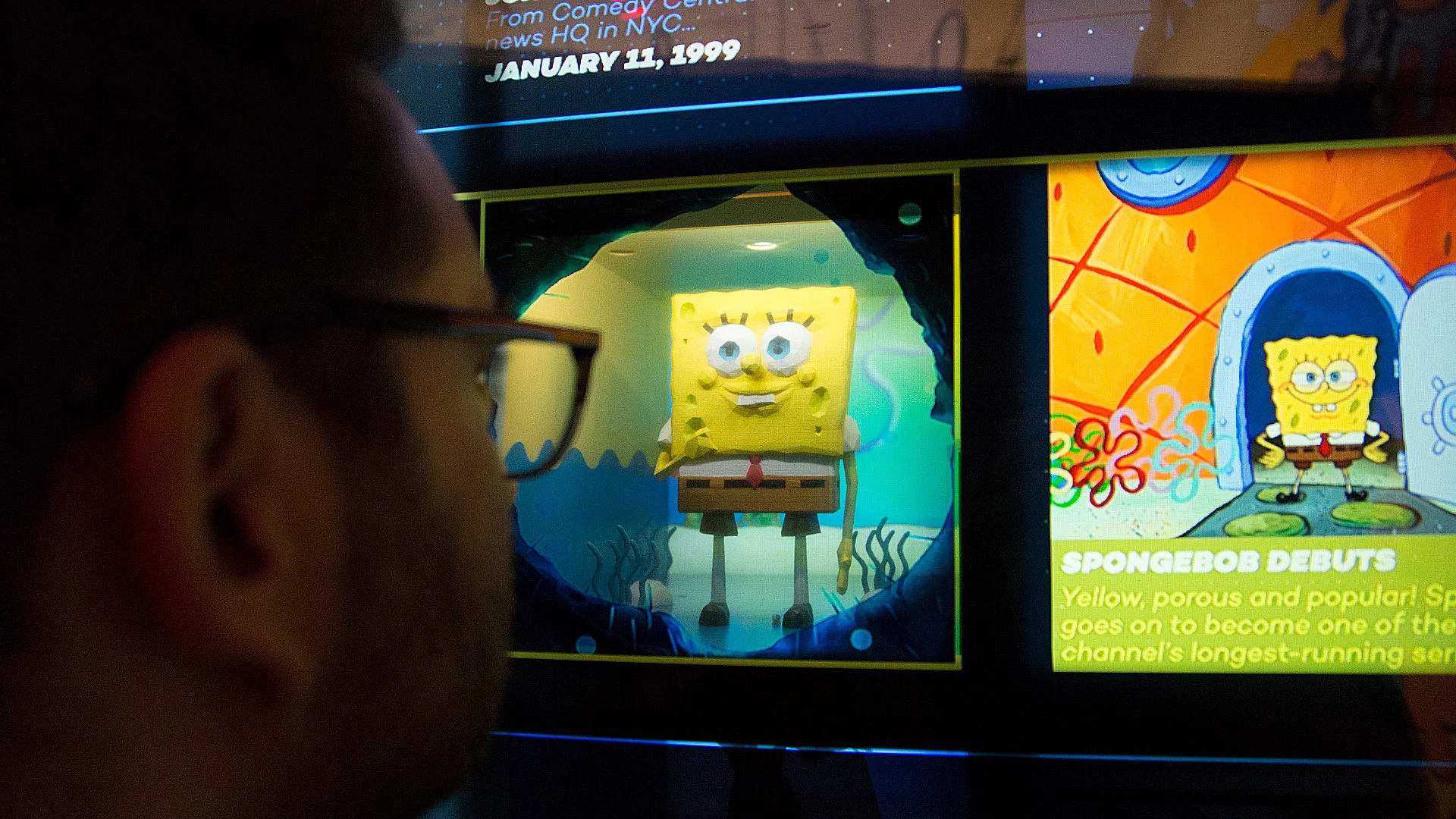

Viacom's Times Square headquarters serves many roles: office space, production studios, tourist destination, and cultural organ, the place where thousands of employees and creators from the company's diverse networks come together and share a common vision and history.

Viacom's internal production studio, Catalyst, came to Hard Work Party and our strategic partners, Slanted Studios, with a need for communicating Viacom's rich history to new hires and VIP visitors. They had a large wall space in their soon-to-be-built visitors' center, a treasure trove of footage spanning decades, and access to Viacom's networks' many properties.

At the time, Catalyst knew we had a thing for transparent LCDs, and so of course they made their way into the design. This piece features a custom-made 84" selectively-transparent LCD enclosure with integrated lighting and a 10-point touchscreen.

The enclosure houses a series of modular dioramas, each of which has its own software-controlled lighting and mise en scène including lots of 3D-printed characters highlighting key moments from Viacom's past. User interaction with the touchscreen reveals the dioramas behind the opaque screen and triggers scene-specific lighting, giving a feeling of mystery to the box interior.

The system was designed from the ground up to take advantage of Catalyst's in-house animation capabilities: lighting, screen graphics, and dioramas are modular and can be updated on the fly. Plus the box doubles as a tanning booth!

Slanted Studios & Hard Work Party

Executive Creative Director: Michelle Higa Fox

Technical Director: Noah Norman

Producer: Jennifer Vance

Programming: Beau Burroughs, Mary Franck

Production Assistants: Ana Kim, John Hughes

Digital Fabrication: Gamma.NYC

Fabrication Designer: Marcus Swagger

LED and Wiring Specialist: Aaron Lobdell

Transport/Art Handling: Crozier Fine Arts

Viacom Catalyst: Branding & Strategy

SVP, Brand Strategy & Creative: Cheryl Family

VP/Executive Producer: Matt Herron

VP of Brand Strategy: Tori Turner

VP of Design: John Farrar

Director: Matt Hanson

Art Director: Sean McClintock

Animation Lead: Josh Lindo

Animation: Ross McCampbell, James Zanoni

3D Modeling: Scott Denton, Sean McClintock, Michael Berger, Ryan Kittleson, Casey Reuter, Tom Cushwa, Scott Hubbard

Fabrication: Elise Ferguson, Tim McDonald, James Zanoni, Benjamin Kress

3D Printing: Shapeways, New Lab, 3d Hubs, NRI

R&D Design & Animation: Carl Burton

R&D Prototyping: Michael Boczon

Video Editor: Jared Smith, Alex Zagey

Archival Footage Research: Jason Yorke

Copywriter: Tanya Davis Copy

Editor: Tory Mast

Research: Jen Li

Case Study

DP: Chris Willmore

Music: Ketsa "Within the Earth"

August 2016

GLASSLANDS (2013)

Glasslands Gallery is not just a music venue; it's our favorite place to see a show in NYC. It's also a space known for the installation art and idiosyncrasies that set the tone and give the room a DIY loft feel.

Our installation at Glasslands is a distributed, modular design that uses common mailer tubes (treated for flame retardancy) to house over 300 RGB LEDs, individually addressable, passed through diffusion, and mounted in clusters about the venue.

In an attempt to speak to the warm, intimate feel of the space, the design deliberately destabilizes the stage / house dichotomy by spreading from the upstage wall all the way to the entrance of the venue. Because each tube is individually addressable, the system can highlight the three downstage 'chandeliers' over the performers' heads, the cluster over the house DJ position, or even individual tubes peeking into the bathroom stalls, or it can treat the whole room as one large canvas, passing shapes like spotlights or stripes through the point-space.
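Treating the room as one canvas amounts to sampling a function of 3D position at each tube's location. The sketch below assumes each tube's (x, y, z) position has been measured on site; `spotlight`, `sweep_center`, and `stripes` are hypothetical names, and the smoothstep falloff is just one reasonable choice, not necessarily what the venue software used.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def spotlight(points: Sequence[Point], center: Point, radius: float) -> List[float]:
    """Brightness 0..1 per tube: 1 at the spot's center, smooth falloff to 0 at radius."""
    out = []
    for p in points:
        d = math.dist(p, center)
        if d >= radius:
            out.append(0.0)
        else:
            t = 1 - d / radius
            out.append(t * t * (3 - 2 * t))  # smoothstep falloff
    return out


def sweep_center(start: Point, end: Point, phase: float) -> Point:
    """Position of a moving spot on a straight path; phase in [0, 1]."""
    return tuple(a + (b - a) * phase for a, b in zip(start, end))


def stripes(points: Sequence[Point], axis: int, period: float,
            offset: float) -> List[float]:
    """Hard-edged stripes along one axis (0=x, 1=y, 2=z); offset scrolls them."""
    return [1.0 if ((p[axis] + offset) % period) < period / 2 else 0.0
            for p in points]
```

Driving `phase` or `offset` from a slow oscillator would move the shapes through the room, and the same per-tube brightness list could just as easily be masked to a single cluster such as the downstage chandeliers.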

Driving the system is our custom software, refined through over a year of development and onsite testing. It offers a dynamic set of high-level controls that let the house sound engineer (GL has no full-time lighting guy) direct the software using the same subjective criteria used to describe music or the feel of an interior, with direction like 'darker, cooler colors, dimmer in the house, slower moving'. That frees the venue crew to focus on the task at hand while still providing adjustable, appropriate, and dynamic looks with minimal intervention, whether through the onscreen interface and built-in LFOs, MIDI controls, or OSC over wireless.
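One way to picture those high-level controls is as a small set of 0-to-1 'mood' values fanned out into lower-level rendering parameters. Every name and constant below is hypothetical; the point is only that a request like 'darker, cooler colors, dimmer in the house, slower moving' becomes four slider moves (darkness up; warmth, house level, and pace down) rather than dozens of per-fixture edits. MIDI or OSC input could write into the same fields.

```python
import math
from dataclasses import dataclass


@dataclass
class Mood:
    """High-level 'subjective' controls, each 0..1 (hypothetical names)."""
    darkness: float = 0.5     # 'darker'
    warmth: float = 0.5       # 0 = cooler, 1 = warmer
    house_level: float = 0.5  # 'dimmer in the house'
    pace: float = 0.5         # 'slower moving'


def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


def low_level(m: Mood) -> dict:
    """Translate a Mood into lower-level rendering parameters."""
    return {
        "master": 1.0 - 0.8 * clamp01(m.darkness),      # never fully black
        "hue_center": 0.6 - 0.55 * clamp01(m.warmth),   # 0.6 ~ blue, 0.05 ~ orange
        "house_gain": clamp01(m.house_level),           # scales house-side tubes only
        "lfo_hz": 0.02 + 0.5 * clamp01(m.pace),         # speed of built-in motion
    }


def lfo(t_s: float, hz: float) -> float:
    """Sine LFO scaled to 0..1 for modulating brightness, position, etc."""
    return 0.5 + 0.5 * math.sin(2 * math.pi * hz * t_s)
```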

AM owes huge thanks to the Glasslands owners Rami Haykal and Jake Rosenthal, staff Josh Thiel and Cameron Hulk, plus Eileen Tang, Trevor Hufnagel, Francisco Casablanca, Guillermo Echevarria, Dark Momino, Chuck Reina, and many more who gave countless hours to the project, strictly on faith and love of tubes.

Made in Max.

Lighting Engineering: Jason Fellows

Avey Tare photos courtesy of Jason Bergman

Grid photo courtesy of Eileen Tang

Tubesoft uses the very awesome imp.dmx ArtNet library by David Butler.
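imp.dmx handles the Art-Net transport inside Max. For readers curious what goes over the wire, the Python sketch below builds an ArtDmx packet by hand from the Art-Net specification; it is a swapped-in illustration, not imp.dmx's code, and `artdmx_packet`, `split_rgb`, and `send_frame` are hypothetical names. It also shows why over 300 RGB LEDs need more than one universe: assuming three 8-bit channels per LED, that is more than 900 channels, and a DMX universe carries at most 512.

```python
import socket
import struct
from typing import List, Sequence, Tuple

ARTNET_PORT = 6454  # standard Art-Net UDP port


def artdmx_packet(universe: int, data: bytes, sequence: int = 0) -> bytes:
    """Build an ArtDmx packet. `universe` is the 15-bit Port-Address."""
    if not 0 <= universe < 1 << 15:
        raise ValueError("Port-Address is 15 bits")
    if len(data) > 512:
        raise ValueError("a DMX universe holds at most 512 channels")
    if len(data) % 2:
        data += b"\x00"                  # spec requires an even data length
    if len(data) < 2:
        data = data.ljust(2, b"\x00")    # and a minimum of 2 bytes
    header = b"Art-Net\x00" + struct.pack("<H", 0x5000)  # OpDmx, little-endian
    header += struct.pack(
        ">HBBBBH",
        14,                       # protocol version 14, big-endian
        sequence & 0xFF,          # 0 disables sequencing on receivers
        0,                        # physical input port (informational)
        universe & 0xFF,          # SubUni: low 8 bits of Port-Address
        (universe >> 8) & 0x7F,   # Net: high 7 bits
        len(data),                # data length, big-endian
    )
    return header + data


def split_rgb(pixels: Sequence[Tuple[int, int, int]],
              channels_per_universe: int = 510) -> List[bytes]:
    """Flatten RGB pixels and split them into universes without splitting a pixel."""
    flat = bytes(c for px in pixels for c in px)
    return [flat[i:i + channels_per_universe]
            for i in range(0, len(flat), channels_per_universe)]


def send_frame(sock: socket.socket, host: str,
               pixels: Sequence[Tuple[int, int, int]],
               first_universe: int = 0) -> None:
    """Send one frame of RGB pixels as consecutive universes over UDP."""
    for i, chunk in enumerate(split_rgb(pixels)):
        sock.sendto(artdmx_packet(first_universe + i, chunk), (host, ARTNET_PORT))
```

Splitting at 510 channels keeps each LED's red, green, and blue in the same universe (170 LEDs per universe), so 300 LEDs land in two universes of 510 and 390 channels. Sending would use an ordinary UDP socket, e.g. `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)`.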

NOTHIN' BUT 'NET (2017)

Nothin' But 'Net is v1 of a GIF-driven basketball hoop game, conceived and commissioned by Dark Igloo for GIPHY, with guts by Hard Work Party and body by Rob Ebeltoft.

The machine loads a series of GIFs via GIPHY's API and switches GIFs when you make a bucket. Super simple and super addictive.

The guts are a Raspberry Pi equipped with an IR beam-break sensor which is polled with synchronous modulation (because fluorescents) through an ADS1115 ADC. The Pi hosts a webserver that serves up the HTML display page, which can run on any device on the same network (in this case a Mac Mini) and gets its commands via websocket from the Pi sensing daemon.
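Synchronous modulation here means pulsing the IR emitter and comparing readings taken with it on and off: ambient light, including fluorescent flicker, appears in both readings and largely cancels in the difference, as long as the two readings are taken close together. The sketch below shows that technique plus a hysteresis detector that fires once per interrupted beam. Hardware access is abstracted into caller-supplied functions (hypothetical; on the Pi they would wrap a GPIO pin and an ADS1115 driver), the thresholds are placeholders, and the websocket push to the display page is omitted.

```python
from typing import Callable


def demodulated_signal(set_emitter: Callable[[bool], None],
                       read_adc: Callable[[], int],
                       pairs: int = 4) -> float:
    """Average (emitter-on minus emitter-off) over several on/off pairs.

    Ambient light shows up in both readings and cancels in the difference;
    only light from our own emitter reaching the receiver remains.
    """
    total = 0
    for _ in range(pairs):
        set_emitter(True)
        lit = read_adc()
        set_emitter(False)
        dark = read_adc()
        total += lit - dark
    return total / pairs


class BucketDetector:
    """Count a basket when the beam goes from clear to blocked."""

    def __init__(self, threshold: float, rearm: float):
        self.threshold = threshold   # below this the beam counts as blocked
        self.rearm = rearm           # must rise above this to re-arm (hysteresis)
        self.blocked = False

    def update(self, signal: float) -> bool:
        """Return True exactly once per beam interruption."""
        if not self.blocked and signal < self.threshold:
            self.blocked = True
            return True
        if self.blocked and signal > self.rearm:
            self.blocked = False
        return False
```

Each `True` from `update` is the moment the sensing daemon would tell the display page to switch GIFs; the gap between `threshold` and `rearm` keeps a ball rattling through the net from counting twice.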

The device also hosts an admin page that lets users change the search query used to load GIFs, which is a bit of an easter egg after somebody has been playing it for a while.

We're already exploding with ideas for v2 ... this thing is a slam dunk.

Images courtesy of Dark Igloo.

RED BULL MUSIC ACADEMY (2013)

Aside from the citywide festivals, the radio broadcasts, and the daily newspaper, a large part of RBMA's visible output is in the form of its lecture series.

For RBMA's 2013 NYC academy, their journalist and musician interviewers conducted informal talks with some 50 luminaries from all aspects of music production, arrangement, and performance, notably including Philip Glass, James Murphy, Lee 'Scratch' Perry, Nigel Godrich, Brian Eno, Debbie Harry, Giorgio Moroder, Q-Tip, El-P, Van Dyke Parks, ?uestlove, and Rakim. Followup questions after each talk came from RBMA participants, studio assistants, and staff, among whom were Flying Lotus, Four Tet, Just Blaze, Thundercat, Throwing Snow, and Koreless.

For the series, alongside epic documentarians and old friends M ss ng P eces, Hard Work Party designed and ran a 3-camera studio, audio system, and DSP components, directing the crew to create the lecture films hosted on the RBMA site.

During the planning and buildout phases of the space, we worked with Inaba architects, systems integrators, automation engineers, and RBMA's in-house studio, radio, and media teams to advise on issues of design as they pertain to future uses for shoots and performance.

Pictured, from top to bottom:

RBMA founder Torsten Schmidt talking to Brian Eno pre-interview

Flying Lotus interviews Nigel Godrich

Debbie Harry and Chris Stein on the couch

Lee 'Scratch' Perry, the Upsetter, Godfather of Dub

Q-Tip

VANITY FAIR / BMW (2011)

For presentation at Vanity Fair's Fashion in Film Festival, BMW commissioned the production of four short films collectively titled 'Spotlight on Innovation'. The bios focused on innovators from fields like urban planning, exploration, and fashion, and VF wanted them presented in a form that spoke to the theme.

Hard Work Party, working here with Industria Creative, knew the piece had to fit in with its museum surroundings: clean, built-in, and permanent. It also had to be simple and robust enough to withstand constant use by the public, unmonitored for 3 days.

For the physical display component, we built a rear-projection system housed in a custom cabinet - a single projector throwing what looked like 5 separate channels of content onto floating panels of custom-cut rear projection plexiglass.

For interactive playback control, we wrote an iOS app and embedded the iPad into a purpose-built kiosk.

Control messages originating at the kiosk made their way to our purpose-made software playback system to allow on-the-fly adjustment of video geometry and timing, making it possible to fine tune the placement of images on the floating panels throughout the settling process of the housing cabinet.

The result was kind of magic. Check the shaky-cam video to get the gag.

Thumbnail image courtesy of Owen Hope for Industria Creative