For Music HackSpace’s TouchDesigner meetup about Callbacks and Extensions, Noah, Hard Work Party chief, gave a quickfire talk alongside Plusplusone’s Wieland Hilker and Derivative’s own Python ‘police’, Ivan DelSol.

Noah’s talk focused on the why and how of validation in data handling, and on how the Python library Pydantic makes it easy. Noah’s portion of the event begins about an hour in, but the whole thing is well worth a watch!

Thanks to Music HackSpace for hosting such a great event!

DEEP DIVE: How I Build TouchDesigner Apps in 2025

Just what it says on the tin!

For Third Wave’s YouTube channel, Noah explains how he builds TouchDesigner apps in 2025, including actionable advice on using Python extensions, Finite State Machines, and as few nodes as possible, emphasizing legibility, maintainability, and setting code up for collaboration with other developers.
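For readers new to the idea, here is a minimal sketch of a finite state machine in plain Python, the kind of structure that can sit inside a TouchDesigner extension class. It is not Noah's code from the video: the `ShowState` states, the event names, and the `ShowMachine` class are hypothetical, and the sketch uses no TouchDesigner API, so it runs anywhere.

```python
from enum import Enum, auto


class ShowState(Enum):
    """Hypothetical states for an installation's show loop."""

    IDLE = auto()
    ATTRACT = auto()
    ACTIVE = auto()
    OUTRO = auto()


# Every legal transition: (current state, event name) -> next state.
TRANSITIONS = {
    (ShowState.IDLE, "start"): ShowState.ATTRACT,
    (ShowState.ATTRACT, "visitor_detected"): ShowState.ACTIVE,
    (ShowState.ACTIVE, "visitor_left"): ShowState.OUTRO,
    (ShowState.OUTRO, "outro_done"): ShowState.ATTRACT,
}


class ShowMachine:
    """Tiny finite state machine; in TouchDesigner this could live in an extension class."""

    def __init__(self):
        self.state = ShowState.IDLE
        self.history = [self.state]

    def handle(self, event: str) -> bool:
        """Apply an event; return True on a transition, False if the event is ignored."""
        next_state = TRANSITIONS.get((self.state, event))
        if next_state is None:
            return False  # not a legal event in the current state, so ignore it
        self.state = next_state
        self.history.append(next_state)
        return True


machine = ShowMachine()
machine.handle("start")             # IDLE -> ATTRACT
machine.handle("visitor_left")      # ignored: not legal in ATTRACT
machine.handle("visitor_detected")  # ATTRACT -> ACTIVE
```

Keeping every legal transition in one table means an unexpected event is simply ignored instead of leaving the app in an undefined state, and a collaborator can read the whole flow at a glance.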

Praise for ‘How I Build TouchDesigner Apps in 2025’:

“Must be one of the best tuts i've seen in a long time”

“this background music makes me feel schitzo”

“My fav content creator!”

“you got me inspired”

“No I don't code like this - yes, I will start doing so!”

TALK: Gradient Descent @ TouchDesigner Philly

For the first TouchDesigner Philly meetup, Hard Work Party Chief Noah Norman kicked things off with a talk about a core challenge in Hard Work Party’s process:

When we're working with new media, when anything, including complex systems, architecture, electronics, software, and emergent behavior, can be your medium, how do you iterate in the creative process?

How do we navigate through the hyper-parameter space of possibilities when a change in the design, or the medium, or the technique, or the positioning, or the framing, etc., utterly changes the effect of the result?

This talk is about that process, and, more constructively, about techniques for getting out of 'local minima' - getting unstuck when it seems like you've run out of options when designing in new media.

INTERVIEW: Noah Norman on Epoch Optimizer for Derivative

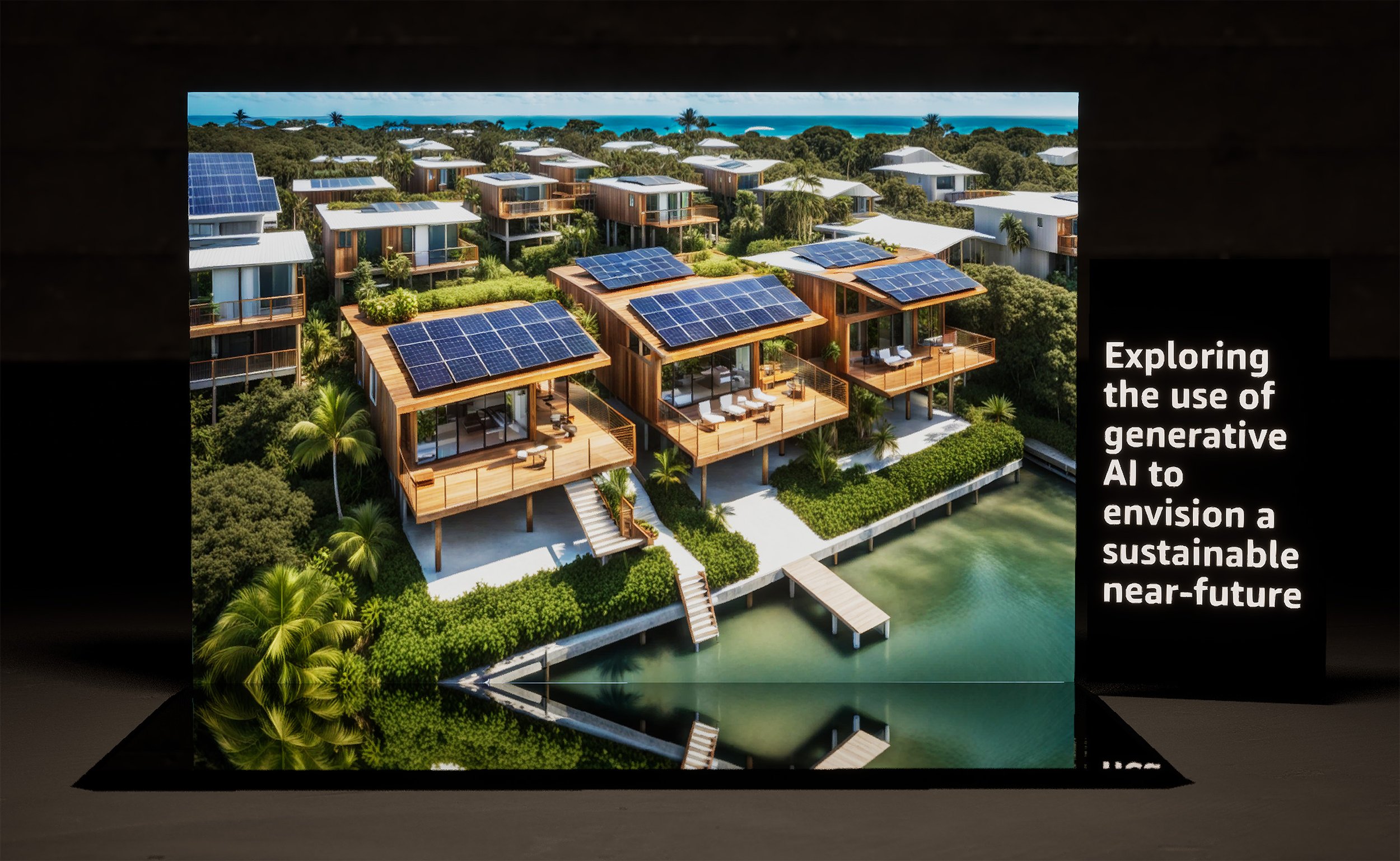

Derivative, makers of TouchDesigner, have just posted a long-form interview with Noah Norman, Chief of Hard Work Party, about Epoch Optimizer, our installation for AWS at the Sustainability Summit at the 2023 re:Invent conference.

This interview goes deep on the efforts to ‘rhyme’ the form and function of this piece as an exploration of the use of generative AI to envision a more sustainable alternate present.

In addition to the design and theme considerations, we discuss some of the technical aspects involved in the realization of the work, including command and control of real-time inference from TouchDesigner as a scripting and presentation layer.

Read the interview on Derivative’s site here.

VIDEO: Making Games in TouchDesigner?

TouchDesigner is the best tool out there for new media installations, experiments, visual art explorations, large-scale interactive systems, etc. etc., but can it be used to make games? What are the pros and cons? For Third Wave, Noah gets into it in this exhaustively produced video. Exhausting to make, not to watch!

In addition to a deep dive on the ins and outs of using TouchDesigner to make games, we also talk to Simon Alexander-Adams, aka polyhop, about his experiences building games in TouchDesigner.

GENERATIVE AI in PRODUCTION

Prototype interior images generated for a home decor client. Generated from a textual embedding derived from client-supplied training material.

As with any hot technology, we get a lot of inbound requests from prospective clients looking to deploy generative AI in production. As is often the case in these situations, we’re finding that clients’ expectations of what these systems can do don’t align with how the systems actually behave in production.

We saw this dynamic in the past with architectural projection mapping, which photographs well from the vantage point of the projector, but can be underwhelming when seen in person, off-axis, with stereo vision, unretouched, where the projected light is fighting with ambient spill. Trying to communicate these shortcomings in advance is often met with pushback in the form of … more photos and video, which of course look great.

Today’s expectations of the capabilities of generative AI are calibrated by exposure to edited (some might say cherry-picked) samples of LLM and text-to-image outputs. I’m not trying to make a case that these systems can’t produce excellent outputs from time to time, but rather that they only do so infrequently, and that systems that expose end users to raw outputs without a curation step in the middle are likely to under-perform expectations at best, and cause a fiasco at worst.

At issue is, in part, the tendency to center the AI-generatedness of the product in its presentation, which, perversely IMO, raises expectations in viewers, who have likely seen some impressive AI-generated outputs in the past.

Contributing to this is the spectacle that accompanies marketing initiatives, further raising expectations of the output quality.

As with most such distributions, of course, the best stuff - the stuff that gets passed around - sits in the upper tail of the bell curve, while the majority of the outputs (say, 85%) strike the viewer as anywhere from less-great-than-other-AI-stuff-they’ve-seen all the way down to objectively awful.

Empirical Rule by Melikamp, modified by me, CC BY-SA 4.0

Especially in the world of AI-generated images, the average viewer’s bar is often set by the impressive albeit all-too-recognizable and HDR’d-all-to-hell output of Midjourney. Midjourney makes aesthetically impactful images with an incredible hit rate (very few duds, munged images, deformed bodies, etc.), but Midjourney is made of secret sauce and doesn’t have an API at the time of this writing. That means its influence on AI image-making in real production acts exclusively to raise the bar in a way that leads to unrealistic expectations.

All of this is to say, at the moment, if you want to present the top X% of AI-generated output, you have to design your experience / system / product to accommodate an editing or curation step that removes the other (100-X)% - curation done either by the end user or by a wizard behind the curtain.

When clients push back on this idea I find myself explaining that, in service of the (often short-lived, often promotional) project they want to undertake, they’re proposing that we improve on the state-of-the-art products of VC-backed giants Stability and OpenAI.

This task raises a semi-rhetorical question I sometimes ask people with ambitious ideas for small projects — ‘given how much it will take to do that, it will be pretty valuable if we pull it off. Is this something you want to spin off into a startup?’

With that said, there are strategies for hiding curation and for improving the hit rate of these generative systems, and even turning the constraint of a low hit rate into a feature that contributes to an experience.

We’ve learned a lot in the last 2 years about deploying generative AI in production, and below I’ll use three recent projects to discuss how we’ve approached this issue.

HIDDEN HUMAN in the LOOP: ENTROPY

Entropy was a piece I made with Chuck Reina beginning in 2021, using then-SOTA VQGAN+CLIP and GPT-3 in a feedback loop to create what presents as an (unhinged) collectible card game.

We truthfully presented the work as the result of repeatedly prompting these two AI systems with the previous generation’s outputs, but what we didn’t highlight was that the process involved an EXTREMELY LABOR-INTENSIVE curation step.

I personally selected among four options for every field on every card before generating the next generation, which doesn’t sound that bad until you realize that 3,000 cards * 7 fields * 4 options = 84,000 curation steps, not including the first two times I generated the first 10 generations of the project before restarting for various reasons.

To get this done, Chuck built a web-based, keyboard-driven review tool that I used for literally 50 days straight until I had carpal tunnel in both hands.

Had we not included this hidden hand, the recursive AI outputs would have quickly devolved into sophomoric jokes, pop culture references, or regurgitation of ideas from other places.

Many of you never used GPT-3 - in fact, many of you never heard of it, for good reason. It was less good than the GPTs we use today. Similarly, VQGAN+CLIP, while revolutionary, was the last of the GANs used in any major way. Diffusion models are a big step up.

If you want to read more about the process and learnings that came from this project, check out this thoughtful writeup/interview in nftnow.

CURATION as EXPERIENCE: CHEESE

Just a small handful of the stuff on my Cheese profile page, which I encourage you to peruse.

The first time I saw Dreambooth in action it was like being hit by a bolt of lightning. I instantly envisioned a social network that resembled Instagram but was entirely made of AI-generated images.

I immediately teamed up with a crew to make a prototype: a social network called Cheese. Users entered prompts with the keyword ‘me’ to identify themselves, posted what they liked, and hit ‘do me’ on public images of others to get a remixed version of that prompt with themselves in it.

It was, to put it mildly, addictive, sticky, hilarious, and gonzo.

What it wasn’t was magic. It produced plenty of deformed, unrecognizable, blurry, munged, off-prompt, confusing, upsetting, and disappointing images.

But Cheese was designed to be a paid service - users buy tokens, and they spend those tokens to generate - effectively to purchase - images. From interviewing users, I identified early on that one of our most important KPIs, aside from how many users we got through onboarding, or MAUs, or CAC, or ROAS, or whatever, was going to be our hit rate.

That number - what percentage of the images generated are images that a user likes, that they would post, share, download, etc. - was a major contributor to the average user’s perception of the quality of the experience, and we found that users’ initial expectations of what that number should be varied wildly, but were generally higher than we could satisfy.

We expended a ton of effort, especially for a prototype (unsurprising if you know us), in bringing that hit rate up. We ran loads of experiments on training, data preparation, inference, prompt injection, and onboarding, and internally discussed dozens of additional approaches as we saw them bubble up in papers. Some of them moved the needle, but we saw (rightly, as time has borne out) that publicly-available approaches in the space wouldn’t do much to change the balance in the coming year-plus.

With that in mind, we did what we always do: we embraced the constraints. We designed the Cheese UX with user curation at the center of the loop.

We designed a system where each prompt generated 9 variations that were only visible to the user in a private ‘camera roll’, from which they could choose to make any, or none, of the images public, only then posting them to their profile and making them visible in the public feed.

Because the users weren’t using a real camera but rather entering a prompt or hitting ‘do me’ and waiting for the results to compute, the private tab became more like a slot machine - a place our hooked users repeatedly refreshed, anticipating the dopamine hit from the variable reward of a batch of unfathomably weird, surprising, and hilarious images.

We found that this dynamic mirrored how users behave in other media: some posted loads of their outputs, some edited heavily and posted only the very best, and some almost never posted, instead sending their generations privately to friends.

If we could, would we engineer a system where 100% of the images produced were ‘hits’? Absolutely. Failing that, our users were very happy with where we arrived, putting the control in their hands and making curation part of the experience.

CONSTRAIN and BATCH: INTERIORS

Some prototype images from an ongoing project to generate interior images in a very specific style.

These 9 were cherry-picked, you could say, out of a batch of 64.

We’re currently engaged in an ongoing project helping a client in the decor space produce photo-realistic AI images with a very particular aesthetic. They’re attempting to use AI to reduce their costs in staging and photographing product for small-screen promotional efforts, e.g., in social media and digital advertising.

Because this client has been producing photos and renderings in this style for years, the surfeit of training material meant fine-tuning of some sort was an option for capturing their look. Through some experimentation, we found textual inversion, rather than LoRA or Dreambooth, to be an effective and easy-to-use way to constrain generations to the style of the training material.

With that said, even a fine-tuned Stable Diffusion has issues with counting, lighting, and realism - issues exacerbated by the training material, which included loads of unconventional furnishings like amorphous couches, improbably cantilevered chairs, and side tables of indeterminate shape and material. The fine-tuned output, while instantly on-vibe, still had a low hit rate and still produced stools with 2 legs, confusing shadows, and side tables with too many surfaces.

To counteract this tendency, we found ControlNet to be an effective way to ‘lock’ the composition of an image and iterate on its contents, producing variations within a narrow range of blocking options but with different mise-en-scène.

That approach, combined with plain old batching - generating dozens, hundreds, or thousands of images at a go for human curation before presentation - resulted in a system that, while still batting something like .130, produces loads of high-quality, usable images at once for minimal human effort, a huge win for the client over the system they were using before, which involved unsustainable cost and supervision for the nebulous ROI of social media grist.
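To make the batting-average arithmetic concrete, the sketch below treats each generated image as an independent trial with a fixed hit rate (an assumption; real hit rates vary by prompt and composition) and uses the binomial distribution to estimate how many keepers a batch yields and how large a batch has to be to reach a target number of keepers. The function names are hypothetical, and 0.13 is taken from the ".130" figure above.

```python
from math import comb


def expected_keepers(batch_size: int, hit_rate: float) -> float:
    """Mean number of usable images in a batch of independent generations."""
    return batch_size * hit_rate


def prob_at_least(k: int, batch_size: int, hit_rate: float) -> float:
    """Binomial probability that a batch contains at least k usable images."""
    below_k = sum(
        comb(batch_size, i) * hit_rate**i * (1 - hit_rate) ** (batch_size - i)
        for i in range(k)
    )
    return 1.0 - below_k


def batch_size_for(k: int, hit_rate: float, confidence: float = 0.95) -> int:
    """Smallest batch size that yields at least k keepers with the given probability."""
    if not 0 < hit_rate <= 1 or not 0 < confidence < 1:
        raise ValueError("hit_rate must be in (0, 1] and confidence in (0, 1)")
    n = k
    while prob_at_least(k, n, hit_rate) < confidence:
        n += 1
    return n


print(round(expected_keepers(64, 0.13), 2))  # 8.32
print(batch_size_for(9, 0.13))
```

At a .130 average, a batch of 64 yields about 8.3 keepers on average, in line with the 9 picks out of 64 shown above; guaranteeing 9 keepers most of the time takes a noticeably larger batch, which is why batching generously is cheaper than chasing a higher hit rate.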

LASTLY

The last trick up our sleeve - compositing / collage / outpainting. De-emphasizing the importance of any given image by including it in a larger composition can make incoherent individual generations acceptable as a contribution to a surreal gestalt. Just ask Hieronymus Bosch!

We deployed this technique to great effect in the continuous-transformation inpainting process employed in Epoch Optimizer, our durational generative installation for AWS at their re:Invent conference in 2023. Check out the case study at that link, or read our in-depth interview on the making of Epoch Optimizer with TouchDesigner makers Derivative at their site.

![AWS / Epoch Optimizer direct capture [2023]](https://images.squarespace-cdn.com/content/v1/538c81d0e4b0573d1379a8dc/1700666435506-RK5DJYCD7T73Q8QV4ZUA/image-asset.jpeg)

The Garden of Earthly Delights, Hieronymus Bosch, c. 1500

Want to explore using generative AI in production? YOU KNOW WHERE TO FIND US! (here)

Mass Interaction Design: One to Many to One

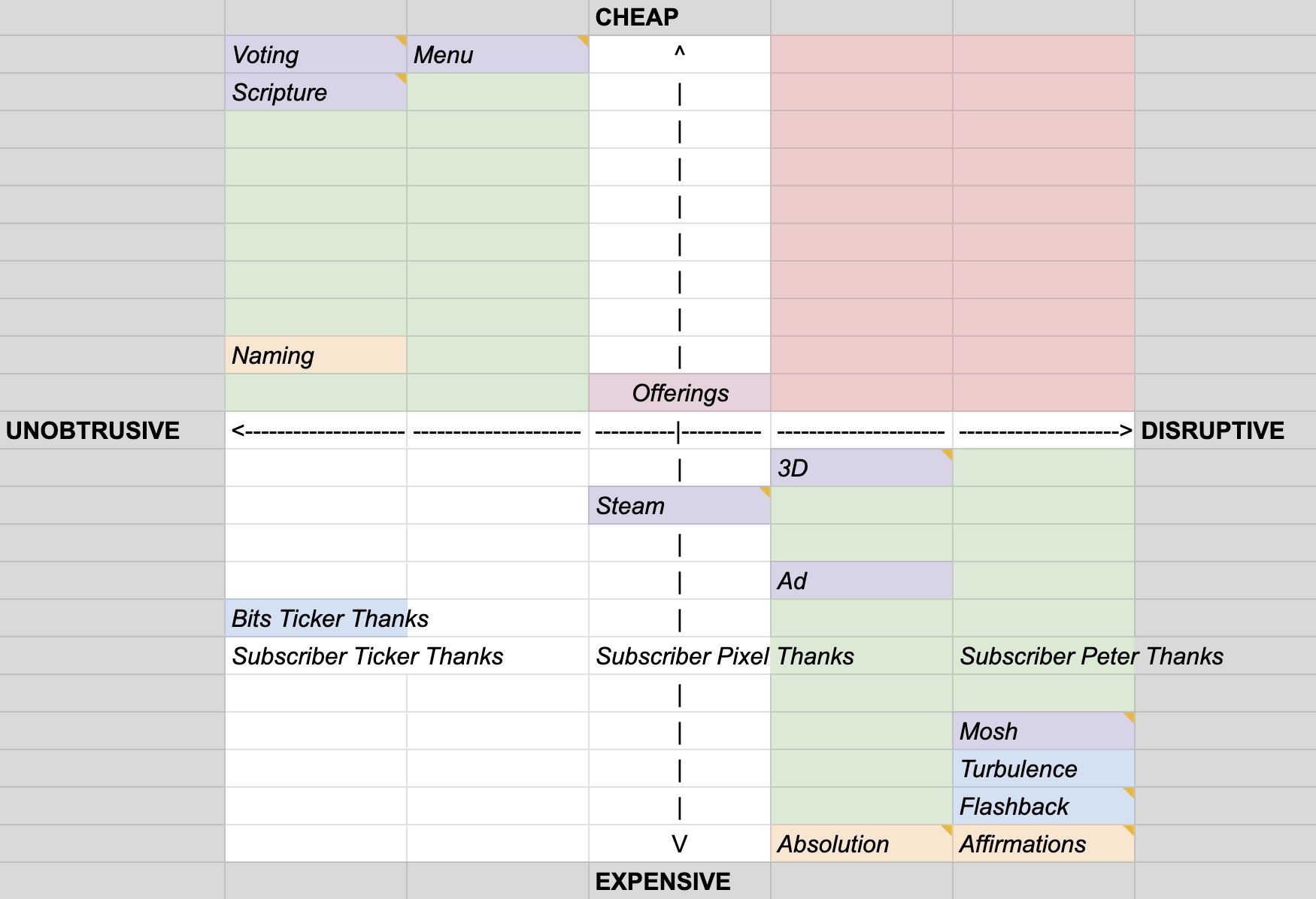

How we engineered the user experience design of Elsewhere Sound Space to maximize interaction opportunities for a crowd of thousands while still running a halfway-coherent show.

When a crowd of 10,000 people yell at the same time, does it matter what any of them say?

How can an audience that big contribute to a conversation?

What does it mean to be 'interactive' when so many people are pushing the buttons?

As creators of both physical installations and web-based experiences, we're used to thinking about either interaction for meatspace users in the dozens, or realtime, often streaming, software experiences that reach viewers in their tens of thousands ... but the idea of combining the two in an interactive experience for a mass audience was new territory.

The context in which we were thinking about this was 2021, mid-pandemic, when we were working with Twitch and beloved Brooklyn music institution Elsewhere to find ways for homebound audiences, hungry for the community and togetherness of live music, to connect.

At the time, virtual production-powered concerts were everywhere; virtual festivals were taking place in browser windows, well-known musicians were represented in machinima extravaganzas in Fortnite, and even simple livestreams felt especially poignant and special.

But none of these approaches offered a real sense that the show you were watching was different because you - one specific, dedicated fan - were there. It's not really possible to feel seen in a traditional one-to-many streaming audience. That’s a broadcast.

And while meaningful interaction could be a great thing to offer a huge crowd, if every fan gets to come up and touch Rod Stewart's hair, he's either not going to get a lot of singing done or there can't be all that many fans doing the touching.

If we tap the glass, we want the fish to look at us, then go back to doing fish stuff.

It was from this perspective that we began thinking about what's possible with bidirectional communication in a live performance when the show is led by its tech team.

[The result was Elsewhere Sound Space <— check that link if you just want to read about the show - this post is about the interaction design behind the show.]

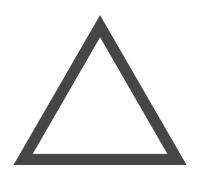

The Disruption Pricing Principle: A Framework for Mass Interaction

This chart and the axes it posits are the main idea behind this post. Don’t worry about the specific interactions plotted here - they’re explained below. Just pay attention to the axes and the implications of the various regions of the space described.

The framework we came up with plots viewer-initiated interactions on two axes: one running from 'cheap' (easy to trigger) to 'expensive', the other from unobtrusive to disruptive.

The theory is simple: viewers get a thrill from being a part of the show, and that thrill is proportional to how much influence their action has on the events taking place onscreen. Constraining this is the idea that the amount of this excitement available to viewers is finite - the more presence in aggregate viewers have in the developments onscreen, the more the show becomes about viewer involvement, and the less value any viewer interaction has.

Shows that index heavily in this direction can be hilarious and thrilling and groundbreaking in their own right, but they feel more like a video game, where the audience is almost puppeting the talent onscreen, and in that context, getting a response from interaction is so expected as to feel almost mundane once you get used to it. Keeping this tension in balance — optimizing the utility of viewer interactions while still having the show be about something else — is the mechanic we’re investigating.

In order to ‘price’ interactions appropriately, actions that are especially disruptive to the show should be challenging to effect, whereas actions that have little to no effect on the show shouldn't take a lot of effort or money. Things that live outside of those two quadrants of the chart will either be too easy to abuse and thus frequently disruptive to the show (cheap and disruptive, e.g., hecklers) or disappointing for the viewer (expensive and underwhelming, e.g., VIP tickets for a 1-second meet and greet).
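One way to make the pricing principle operational is to score each interaction on both axes and flag the ones that drift too far off the diagonal where price tracks disruption. This is a hypothetical illustration, not a tool we used on the show: the 0-to-1 scores, the `tolerance`, and the `assess` function are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interaction:
    name: str
    cost: float        # 0.0 = effortless to trigger, 1.0 = very expensive
    disruption: float  # 0.0 = unobtrusive, 1.0 = derails the show


def assess(interaction: Interaction, tolerance: float = 0.3) -> str:
    """Price should track disruption; flag interactions far off that diagonal."""
    gap = interaction.disruption - interaction.cost
    if gap > tolerance:
        return "underpriced"  # cheap and disruptive: easy to abuse, like heckling
    if gap < -tolerance:
        return "overpriced"  # expensive and underwhelming: a 1-second meet and greet
    return "well priced"


# Hypothetical scores for illustration only.
for item in [
    Interaction("chat command", cost=0.1, disruption=0.1),
    Interaction("free flashback", cost=0.1, disruption=0.9),
    Interaction("pricey chat command", cost=0.9, disruption=0.1),
    Interaction("channel-point absolution", cost=0.9, disruption=0.9),
]:
    print(f"{item.name}: {assess(item)}")
```

Comparing the two scores directly, rather than splitting the chart into fixed quadrants, also lets "right in the middle" interactions count as well priced.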

We should clarify here that while 'disruptive' is generally a negative term, in our case the show was intended to be a gonzo experience thematically, aesthetically, and technically, and show-derailing interactions from the audience, while disruptive to the furthering of the gonzo plot, can be hugely fun and are crucial in fostering the giddy feeling that the onscreen talent might look into the camera and speak directly to you at any moment.

That’s that ‘tap the fishbowl’ feeling we were after, but the effect diminishes with the frequency with which it’s seen.

We want the fourth wall there so when we break it, it counts.

In the interest of letting as many audience members as possible have a hand in initiating these kinds of rewarding interactions, we looked to offer a blend of options that let viewers feel they could participate anywhere from casually, as a crowd member, to aggressively, as a super-fan, much as they would in a rowdy live audience.

Let's look at some of the interactions we built, starting small and working our way up:

CHEAP and UNOBTRUSIVE

Chat Interactions

On Twitch, 'the chat' is a character in every show, and it's the main channel through which audiences and on-screen talent interact. It's become a convention in some of the more graphics-heavy Twitch shows to put the actual chat onscreen in order to provide a diegetic explanation for the talent's sightline when reading from an in-studio monitor.

The chat was sometimes literally onscreen.

It's also an easy place to sprinkle in opportunities for viewers to drive unobtrusive interactions, including automated responses to user keyword commands, whether in the open channel or as a DM. We offered a few chat-based bang commands, like '!scripture', which viewers could use to dredge up bits of lore, backstory, and color writing from the enormous database to which we were constantly adding.
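A minimal sketch of a bang-command responder, assuming chat messages arrive as plain strings; how they reach the bot (Twitch chat, a bot framework, etc.) is out of scope here. The `LORE` table stands in for the show's response database and its entries are placeholders, and `respond` is a hypothetical name.

```python
import random
from typing import Optional

# Placeholder response database; the show's real lore and storage aren't described here.
LORE = {
    "!scripture": [
        "placeholder scripture entry 1",
        "placeholder scripture entry 2",
    ],
}


def respond(message: str, rng: random.Random) -> Optional[str]:
    """Return a reply for a recognized bang command, or None for ordinary chat."""
    words = message.strip().split()
    if not words:
        return None
    command = words[0].lower()
    if command == "!menu":
        return "Commands: " + ", ".join(sorted(LORE))
    entries = LORE.get(command)
    if entries is None:
        return None  # not a command we know; stay quiet
    return rng.choice(entries)


rng = random.Random()
print(respond("!scripture", rng))
print(respond("!menu", rng))  # Commands: !scripture
```

Because replies come from a table, adding lore as it occurs to the writers is a data change, not a code change.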

Having the voice of the show reinforcing tone in the chat, seemingly in dialogue with viewers, provided high ROI for an ongoing process of populating the response databases with tidbits as they occurred to us.

Time-Limited, Binary Voting

(choose your own adventure)

As a visually rich virtual production improv show, the settings in which we placed our talent were key to driving story and conversation forward — in reality, our talent were standing in separate rooms facing a camera and a whole bunch of monitors, one of which was showing the composited scene, one the chat. To that end, our hosts would often put the choice of a change of scenery to the crowd, triggering a timed vote in which the majority won.
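A sketch of a time-limited, majority-wins vote. Two rules here are assumptions the post doesn't state: each viewer holds one ballot and may change it until the window closes, and a tie produces no winner so a human can break it. The injectable `clock` makes the class testable; `TimedVote` is a hypothetical name.

```python
import time
from collections import Counter
from typing import Callable, Iterable, Optional


class TimedVote:
    """Majority-wins vote that only accepts ballots until a deadline."""

    def __init__(self, options: Iterable[str], duration_s: float,
                 clock: Callable[[], float] = time.monotonic):
        self.options = set(options)
        self.clock = clock
        self.deadline = clock() + duration_s
        self.ballots = {}  # username -> chosen option (assumed: one ballot per viewer)

    def cast(self, user: str, option: str) -> bool:
        """Record or change a ballot; False if the window closed or the option is unknown."""
        if self.clock() >= self.deadline or option not in self.options:
            return False
        self.ballots[user] = option
        return True

    def result(self) -> Optional[str]:
        """Winner after the deadline; None while open, with no ballots, or on a tie."""
        if self.clock() < self.deadline:
            return None
        ranked = Counter(self.ballots.values()).most_common()
        if not ranked or (len(ranked) > 1 and ranked[0][1] == ranked[1][1]):
            return None
        return ranked[0][0]


vote = TimedVote(["location A", "location B"], duration_s=60)
vote.cast("viewer1", "location A")
```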

This is our first example in a category of interactions designed to let a large group of people each individually participate in a collective action that has more weight in the proceedings of the show than we'd want any single viewer to have.

Viewers chose the next location for the action.

This interaction was also used to allow the audience to weigh in on subjective decisions that directly affected the plot. In the case of the image below, it was to determine the winner of a key debate for Space President:

A vote to determine the winner of a debate between the challenger and incumbent in the race for Space President.

Onscreen Easter Eggs

Just seeing your name on screen can be rewarding, so we looked for easy ways to make that happen whenever possible. In the Mud Bath scene, viewers who explored the “!menu” command, or who saw other users doing it, would notice that they could type “!steam” in the chat, releasing a burst of steam with their name spelled out in it and raising the temperature on the onscreen thermometer, which slowly fell between bursts. Their username would rise with the steam and collide with the ceiling and walls of the bath before dissipating.

The rate of this interaction was gated by the temperature in the room. Once over a threshold of some 330K (about 135F), viewers who tried to use this interaction were told by the chat responder-bot that they needed to let the room cool a bit before adding more steam.
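The temperature gate can be sketched as a small simulation. The 330 K threshold comes from the post; the ambient temperature, the heat added per burst, and the use of Newton's law of cooling for the slow fall between bursts are assumptions for illustration, and `MudBath` is a hypothetical name.

```python
import math


class MudBath:
    """Gate for the '!steam' command: each burst heats the room, and it cools between bursts."""

    THRESHOLD_K = 330.0  # from the post: above about 330 K, viewers must wait

    def __init__(self, ambient_k: float = 300.0, burst_k: float = 5.0,
                 cooling_per_s: float = 0.01):
        self.ambient_k = ambient_k          # assumed resting temperature
        self.burst_k = burst_k              # assumed heat added per burst
        self.cooling_per_s = cooling_per_s  # assumed cooling constant
        self.temp_k = ambient_k

    def cool(self, dt_s: float) -> None:
        """Newton's law of cooling: decay exponentially toward ambient over dt_s seconds."""
        excess = self.temp_k - self.ambient_k
        self.temp_k = self.ambient_k + excess * math.exp(-self.cooling_per_s * dt_s)

    def steam(self, username: str) -> str:
        """Release a burst if the room is cool enough; return the bot's chat reply."""
        if self.temp_k >= self.THRESHOLD_K:
            return f"@{username} the bath needs to cool a bit before more steam"
        self.temp_k += self.burst_k
        return f"{username}'s steam rises"  # onscreen, the name would float up with the burst
```

With these numbers, six quick bursts take the room from 300 K to the 330 K cap, and a seventh is refused until the bath has cooled. Tying the rate limit to a visible thermometer turns a cooldown into part of the gag.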

Usernames emitting from steam bursts triggered by those users.

Judged Submissions

Each episode included a long-running interaction, promoted at intervals via onscreen text, reminders in the chat, and occasionally by the talent themselves, asking viewers to come up with a new name for the guest in the event that they were indoctrinated into the cult at the end of the episode - another thing that was put to a vote.

Names entered this way (purchased with 'channel points' — more on this below) went into a database that was reviewed off-camera by the show crew, who manually picked a winner for the host to announce at the end of the episode, along with acknowledgement of the viewer who suggested it.

A viewer is acknowledged for contributing the winning cult name for the guest, as judged by the offscreen crew.

Dynamic Credits

We took advantage of the ability to dynamically generate the credits just before they went onscreen to make sure every subscriber and contributor was acknowledged and the top contributors were surfaced too.

Stats about top contributors calculated at credit runtime.

A list of current subscribers, updated just before it went onscreen.
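Because the credits are built at roll time, they can be a simple aggregation over the episode's contribution log. A sketch assuming the log is a list of `(username, amount)` pairs and the subscriber list is fetched just before the roll; `build_credits` and its output shape are hypothetical.

```python
from collections import defaultdict


def build_credits(events, subscribers, top_n=3):
    """Compute credits at roll time from the episode's contribution log.

    events: iterable of (username, amount) pairs, e.g. bits tipped (assumed log format).
    subscribers: current subscriber names, fetched just before the credits roll.
    """
    totals = defaultdict(int)
    for user, amount in events:
        totals[user] += amount
    # Highest total first; ties broken alphabetically so the order is stable.
    top = sorted(totals.items(), key=lambda item: (-item[1], item[0]))[:top_n]
    # Everyone who contributed or subscribes gets a thank-you line.
    everyone = sorted(set(totals) | set(subscribers), key=str.lower)
    return {"top_contributors": top, "thanks": everyone}
```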

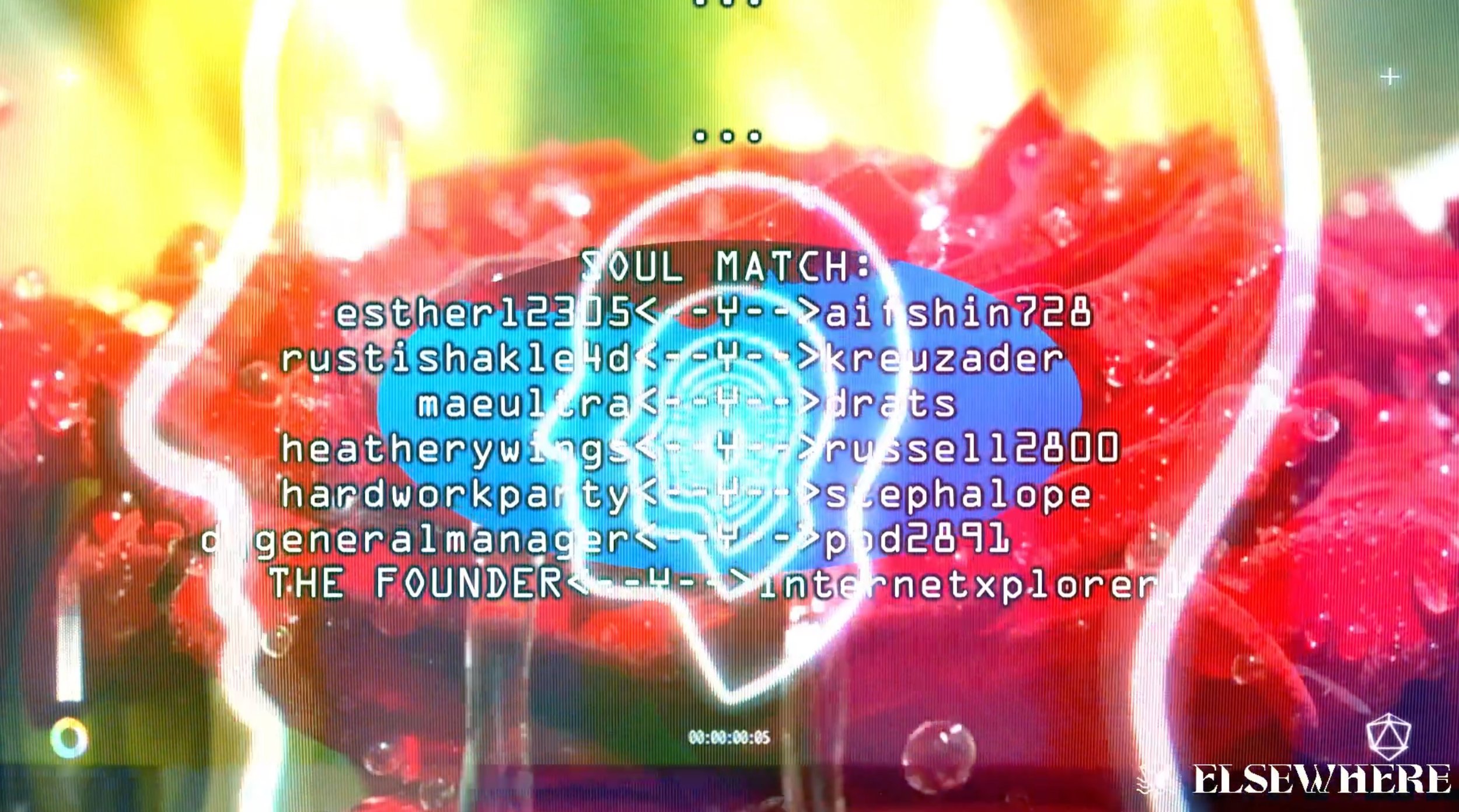

We also hyped a 'Soul Match' gag repeatedly throughout the show, held until the very end of the credits, in which anyone who contributed to the show during the episode was entered for a chance to match with another contributor, or, if they were extremely lucky, with The Founder of the cult - a semi-conscious cadaver who is currently in cryo suspension and only communicates through a receipt printer.

Worshipper internetexplorer matched with THE FOUNDER!!

RIGHT in the MIDDLE

(not too intrusive, not too expensive)

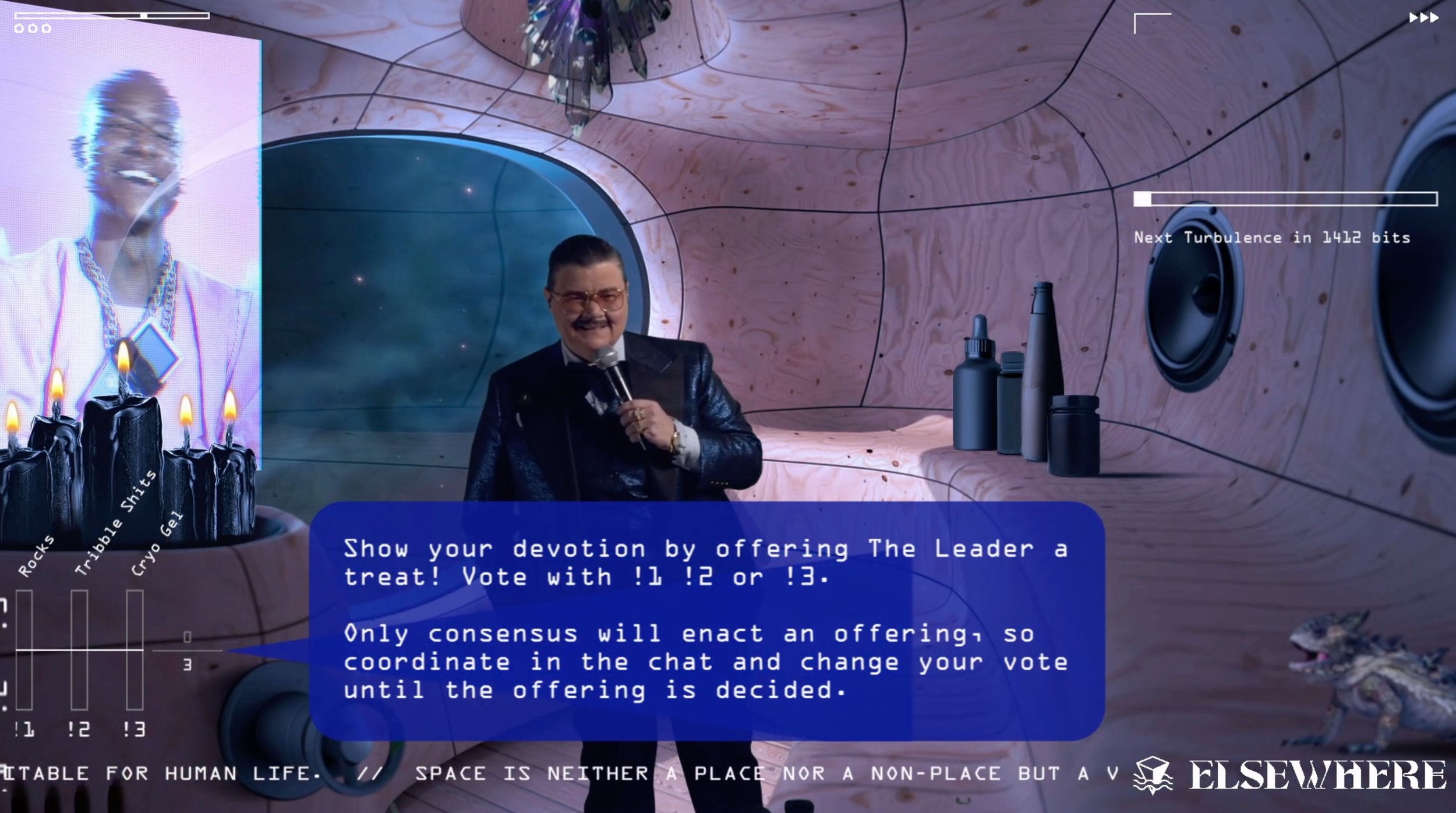

You’d think this category would be where we focused most of our interaction efforts, but most of our ideas were on the more extreme ends of the spectrum. With that said, voting in the ‘offerings’ gag below was likely the most common action taken by any given user.

Parimutuel Voting

(Offerings)

Onscreen tool tip tutorializing the offering mechanism. These kinds of tips were crucial to bringing the audience along with the operation of the various interfaces around the show without requiring the Leader to break the fourth wall and confront the interaction mechanism in a way that didn’t feel funny or fun.

As an enticement to spontaneous audience coordination towards a common goal, we implemented a 'parimutuel voting' system, in which viewers could assign and re-assign their vote at any time among three options, but the decision event wasn't triggered until a certain number of total votes was cast, and until a certain percentage of those votes was cast for a specific option. This required some consensus-building.
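The trigger rule described above (a minimum total vote count plus a minimum share for a single option, with votes movable at any time) can be sketched as follows. `OfferingVote` and the example thresholds are hypothetical, and requiring `min_share` above 0.5 is an added assumption so that two options can never tie for the win.

```python
from collections import Counter
from typing import Iterable, Optional


class OfferingVote:
    """Re-assignable votes; fires only when both the total and share thresholds are met."""

    def __init__(self, options: Iterable[str], min_total: int, min_share: float):
        if not 0.5 < min_share <= 1:
            raise ValueError("min_share must be in (0.5, 1] so there is one clear winner")
        self.options = set(options)
        self.min_total = min_total  # total votes required before anything can trigger
        self.min_share = min_share  # fraction of votes a single option needs
        self.votes = {}             # username -> current choice

    def vote(self, user: str, option: str) -> Optional[str]:
        """Record or move a vote; return the winning option if this vote triggers it."""
        if option not in self.options:
            raise ValueError(f"unknown option: {option}")
        self.votes[user] = option  # re-voting just moves the user's single vote
        total = len(self.votes)
        if total < self.min_total:
            return None
        leader, count = Counter(self.votes.values()).most_common(1)[0]
        if count / total >= self.min_share:
            self.votes.clear()  # reset for the next round of offerings
            return leader
        return None


offering = OfferingVote(["Hyper Mist", "Rocks", "Cryo Gel"], min_total=4, min_share=0.75)
```

A viewer switching sides can be exactly what tips the count, which is the consensus-building dynamic the chat had to work out among themselves.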

The worshippers have offered the Leader some Hyper Mist

When these threshold numbers were large, it meant that viewers had to coordinate in the chat to align opinion around a specific (often ridiculous) choice - in this case among sight-gag prop jokes framed as 'offerings' to the Leader. When the threshold was reached, a sound and title package would overwhelm the frame, insisting that the Leader (and the guest, if they were on camera) accept the offering of their followers by enjoying a Pleasure Cylinder, or a Cryo Gel, or some Hyper Mist, or Rocks, or a Dark Secret, etc. Prop comedy ensued.

Ads

An ad for something called ‘Success Tabs’, triggered by viewer stephalope’s tip

A simple way to limit how often viewers trigger somewhat obtrusive onscreen interactions is to literally price them, in dollars, or, in the Twitch world, in a currency called 'bits', which cost dollars to buy.

The idea of onscreen ads paid for by viewers seemed like a good reward for a nominal contribution of around a dollar, so at any time a viewer could 'buy' an 'ad' for a random product they didn't know in advance, and the ad bubble would appear and float up over the action, featuring some randomly-selected ad copy and their username.

DISRUPTIVE and EXPENSIVE

On the very disruptive and expensive end of the interaction spectrum, we had options in both collaborative action and individual action mechanisms.

Bit Meter: Aggregate Tips

Whenever the show was open for interaction, which was any time the musical guest wasn't performing or saying something especially personal, a meter in the top right of the screen charted the audience's progress towards triggering a disruptive action. The meter was moved along via bit contributions (monetary tips) to the show, and when it filled up, it triggered one of a few actions, usually every 30 minutes or so.

A favorite was the 'Flashback', where the screen would go black and white and wavy (classic flashback effect) and the host would be overcome with an often painful and bizarre improvised memory from the show's backstory.

Next tip of 96 or more bits kicks off the flashback.

“Oh! Ohhhhh!! I’m suddenly remembering my father’s accountant, Sol … god he was a terrible accountant!”
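A sketch of the aggregate-tip meter, assuming a fixed capacity in bits and a list of actions to pick from when it fills. The capacity, the random choice of action, and discarding overflow on reset are assumptions; `remaining` is the kind of number behind a caption like "Next tip of 96 or more bits kicks off the flashback."

```python
import random
from typing import Iterable, Optional


class BitMeter:
    """Pooled tips fill a meter; a full meter triggers one disruptive action."""

    def __init__(self, capacity_bits: int, actions: Iterable[str],
                 rng: Optional[random.Random] = None):
        self.capacity = capacity_bits  # assumed; tune so it fills every 30 minutes or so
        self.actions = list(actions)
        self.level = 0
        self.rng = rng or random.Random()

    def remaining(self) -> int:
        """Bits still needed before the next action fires."""
        return self.capacity - self.level

    def tip(self, bits: int) -> Optional[str]:
        """Add a tip; when the meter fills, reset it and return the action to trigger."""
        self.level += bits
        if self.level < self.capacity:
            return None
        self.level = 0  # overflow is discarded in this sketch
        return self.rng.choice(self.actions)


meter = BitMeter(500, ["Flashback"])
meter.tip(404)
print(f"Next tip of {meter.remaining()} or more bits kicks off the flashback.")
```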

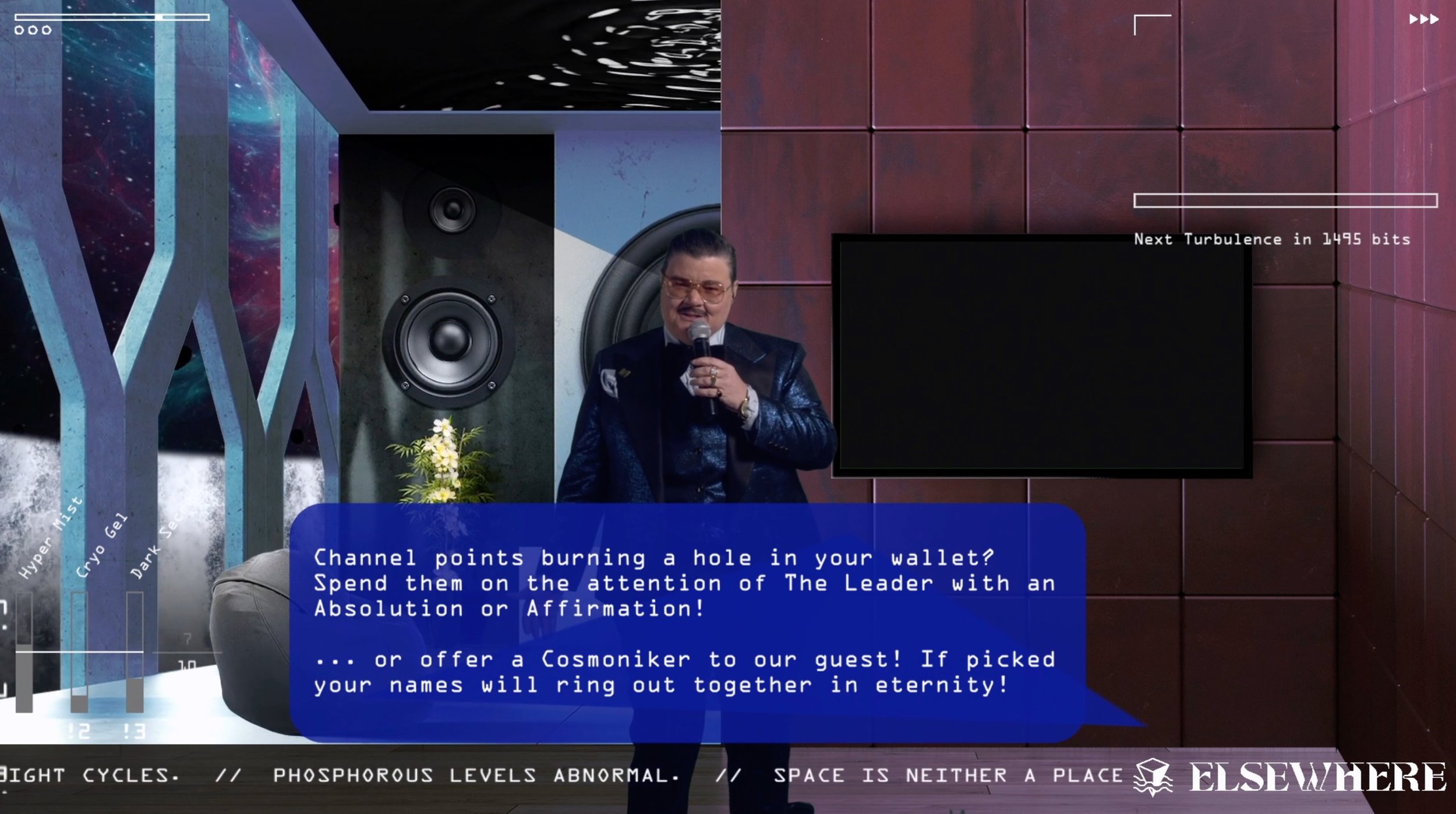

Absolution and Affirmations

The two most show-stopping interactions a single user could trigger were the Absolution and the Affirmation. Users could only invoke these effects by spending a large amount of 'channel points', a kind of loyalty point Twitch viewers accrue on a channel-specific basis. Channel administrators decide whether viewers can redeem these points, how, and for what.

In our case, viewers could spend a large number of channel points - an amount it would take a very active participant almost an entire episode's worth of engagement to accrue - in order to purchase from the leader an Absolution or Affirmation. This was deliberately priced at a level that was only attainable by serious fans who were willing to invest a lot of their slowly-earned channel points into one big action.

The Affirmation was conceived as a sincere response to the psychic turbulence of the real-world moment, intended as a beat where the Leader, speaking directly to the viewer, calling the viewer out by username and looking directly into the camera, would tell them that they are OK - that they are good enough the way they are, and that they are loved, filtered through an intense, throbbing color LUT and a tunnel-vision blur effect.

The Absolution was a similar gag, although delivered in a more tongue-in-cheek fashion, in the style of a megachurch preacher, where the viewer would purchase absolution for a specific sin they described at the time of purchase.

In both cases, the actor playing the Leader would see the username and the Affirmation or Absolution (and the sin requiring Absolution) waiting in a queue on their HUD screen, and as soon as they could get to it, they'd begin the acknowledgement, and the TD would trigger the effect and let the moment take over the show.

In addition to verbal exposition from the host and reminders from the moderators in the chat, occasional onscreen tips would guide users to purchase Absolutions and Affirmations.

An Absolution for viewer seansatomusic
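The HUD queue can be modeled as a first-in, first-out list of paid requests. This is a local sketch, not Twitch's API: on Twitch the platform itself manages point balances, whereas here the balance check is simulated. The prices, field names, and `HudQueue` class are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional

# Hypothetical prices, set so only very active viewers can afford one per episode.
PRICES = {"Affirmation": 50_000, "Absolution": 50_000}


@dataclass
class Redemption:
    username: str
    kind: str
    sin: Optional[str] = None  # only Absolutions carry a sin


class HudQueue:
    """Oldest request first, shown to the actor until they are ready to deliver it."""

    def __init__(self):
        self.pending = deque()

    def redeem(self, username: str, balance: int, kind: str,
               sin: Optional[str] = None) -> int:
        """Check the price, enqueue the request, and return the viewer's new balance."""
        price = PRICES[kind]
        if balance < price:
            raise ValueError("not enough channel points")
        if kind == "Absolution" and not sin:
            raise ValueError("an Absolution needs a sin")
        self.pending.append(Redemption(username, kind, sin))
        return balance - price

    def next_for_leader(self) -> Optional[Redemption]:
        """Pop the oldest request when the actor is ready; None if the queue is empty."""
        return self.pending.popleft() if self.pending else None
```

Queuing rather than firing immediately is what lets the actor pick the moment, so the effect lands on a beat instead of interrupting one.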

Wrap

There’s more to cover but we hope you get the idea of how we applied the framework to our strategy in a way that enabled as many viewers as possible to experience the frisson of having the TV talk to them while still running a (barely) coherent show.

If you’d like to read more about the production, check out our case study here. If you’d like to see us make more Elsewhere Sound Space, help us find a sponsor!! Seriously, we need a sponsor.

If you’d like to read more about the tech behind the production, we did a couple of long-form interviews on the topic and the videos are linked in this blog post.

If you’d like to make another show like this with us, reach out. We’d love to spend all our time making this show or something equally wild and ground-breaking.

ELSEWHERE SOUND SPACE TECH INTERVIEWS 2021

In the past months I’ve had the opportunity to talk about the software behind Elsewhere Sound Space from two different angles in interviews now up on YouTube.

For TouchDesigner InSession with Derivative founder Greg Hermanovic, technical director Markus Heckmann, and director of community Isabelle Rousset, I talked about the creative process of building the dozens of shots and interactive music videos that went into the show.

It’s always fun to talk with the Derivative folks — while as the creators they know the software better than anyone, they retain an intense, almost childlike curiosity about the ways in which their program is used. It didn’t hurt that they’re big fans of the show.

With the Interactive and Immersive HQ’s boss Elburz Sorkhabi, the conversation was focused on the use of Python in TouchDesigner to enable a modular architecture — a design tenet that was critical to keeping the growth of the show platform creative and loose, even when making new show elements in the moments before we went live.

Burz and I disagree about some of the methods we discuss in this interview so it might be interesting for some viewers to hear a debate over architectural style for a framework like TD.

INTERACTIVE IMMERSIVE HQ INTERVIEW 2020

If you're interested in installation artwork, the business of creating permanent digital artworks, Platonic solids, TouchDesigner, UV mapping, unwrapped rendering, LED sculpture, or all of the above, you might enjoy this.

Elburz Sorkhabi is a great host and we're talking about the Rosetta Hall installation, which is yet to be documented on the site (sneak peek!)

PSST.ONE INTERVIEW: BERLIN 2018

When in Berlin for the TouchDesigner Summit and very very food poisoned (thanks Elburz), I did an interview with the very funny and incisive Andrea from psst.one.

This edit heavily features clips from a 2016 month of daily visual experiments I created and documented in one hour each, which is funny to see alongside me being all serious about my ‘work’. Thanks Andrea for making me look relatively coherent and for the nice lighting.