Below I’ll make the argument that the current state of augmented reality is much like that of the early internet, and that the same issues of balkanization and semantic discord that confronted the early web are standing in the way of AR’s potentially transformative adoption. I’ll also try to address some of the pitfalls in the possible near-term resolutions to these issues.

LIFX CIRCADIAN DAEMON

As mentioned here in a previous post about circadian-adaptive lighting, I've been searching for a user-friendly way to use off-the-shelf RGBW LED lighting in the home to reinforce healthy circadian rhythms for some time now.

After doing a bit of research, talking with engineers from the CLA / Philips / Zigbee camp, and some digging around in the LIFX developer forums, I eventually decided I had to roll my own.

Running the controller is pretty simple - users edit a JSON LUT that describes an arbitrary number of scenes. Each scene gets a name, a start time, an HSB value, and a white point. Once a day, the system re-reads that LUT (so it picks up any changes you've made since starting the daemon) and localizes it based on the latitude and longitude you've provided.

If you set the 'extended-sunlight-mode' flag to True, the system will move your scenes named 'sunrise', 'sunrise-end', 'noon', 'sunset', and 'twilight' to the times they would occur on the longest day of the year (June 21-ish) for your location - that's crucial for people who experience the winter blues or just like to keep a regular schedule year-round.

With the flag set to False, those events (if they exist in your LUT) will be localized to match the outside lighting conditions.
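For a rough idea of what that localization step involves, here's a minimal sketch. It is not the daemon's actual code: `solar_fractions`, `localize`, `LONGEST_DAY`, and `clock_offset` are names I've made up for illustration, and the math is the textbook declination / hour-angle approximation in local solar time (no refraction, no equation of time). It only moves 'sunrise', 'noon', and 'sunset'; 'sunrise-end' and 'twilight' are left alone because their exact definitions aren't spelled out here.

```python
import math

LONGEST_DAY = 172  # roughly June 21, assuming the northern hemisphere


def solar_fractions(lat_deg, day_of_year):
    """Approximate sunrise, solar noon and sunset as fractions of the day (0.0-1.0)."""
    # Solar declination for this day of the year, in radians.
    decl = math.radians(-23.44 * math.cos(2 * math.pi * (day_of_year + 10) / 365))
    lat = math.radians(lat_deg)
    # Clamp so polar day / polar night don't raise a math domain error.
    cos_omega = max(-1.0, min(1.0, -math.tan(lat) * math.tan(decl)))
    half_day = math.degrees(math.acos(cos_omega)) / 360.0  # half the daylight span
    return {"sunrise": 0.5 - half_day, "noon": 0.5, "sunset": 0.5 + half_day}


def localize(states, lat_deg, day_of_year, extended_sunlight, clock_offset=0.0):
    """Return copies of the scenes with solar-named ones moved to computed times.

    clock_offset (hypothetical) shifts solar time toward wall-clock time,
    e.g. 1 / 24 for an hour of daylight saving.
    """
    day = LONGEST_DAY if extended_sunlight else day_of_year
    solar = solar_fractions(lat_deg, day)
    moved = []
    for scene in states:
        start = scene["start"]
        if scene["name"] in solar:
            start = solar[scene["name"]] + clock_offset
        moved.append(dict(scene, start=start))
    return moved
```

With the extended flag on, the day of the year is simply ignored and every solar scene lands where it would in late June, which is the whole trick.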

Here's the LUT I've been running:

{

"states":[

{"name":"overnight", "start":0.0, "hue":0, "sat":1.0, "bright":0.02, "kelvin":2500},

{"name":"overnight-end", "start":0.20, "hue":0, "sat":1.0, "bright":0.02, "kelvin":2500},

{"name":"sunrise", "start":0.3125, "hue":30, "sat":1.0, "bright":0.65, "kelvin":2800},

{"name":"sunrise-end", "start":0.3535, "hue":30, "sat":0.6, "bright":0.7, "kelvin":3300},

{"name":"mid-morn", "start":0.4166, "hue":30, "sat":0, "bright":0.8, "kelvin":4000},

{"name":"noon", "start":0.5416, "hue":30, "sat":0, "bright":1.0, "kelvin":5500},

{"name":"mid-day-end", "start":0.7016, "hue":30, "sat":0, "bright":1.0, "kelvin":5500},

{"name":"sunset", "start":0.8125, "hue":50, "sat":1.0, "bright":0.55, "kelvin":5500},

{"name":"bed", "start":0.9375, "hue":20, "sat":1.0, "bright":0.05, "kelvin":2500}

],

"lat":40.677459,

"long":-73.963367,

"extended-sunlight-mode":"True"

}

The system is designed to be daemonized - I'm running it on an RPi. It hosts a bootstrap controller at port 7777 from which you can switch the lights on and off without interrupting the current transition. (Unfortunately, the current implementation of the LIFX HTTP API lacks the ability to put a power state change without interrupting a non-power-related put in progress.)
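To make the LUT format a little more concrete, here's a small sketch of how you could ask "what should the lights be doing right now?" given a fraction of the day. This is an illustration only: the daemon itself leans on the bulbs' own transitions, and `state_at` and `STATES` are hypothetical names. It blends linearly between the current scene and the next one, wraps across midnight, and treats hue as a plain number (no shortest-path wraparound on the color wheel).

```python
from bisect import bisect_right

# A few scenes copied from the LUT above; "start" is a fraction of the day.
STATES = [
    {"name": "overnight", "start": 0.0, "hue": 0, "sat": 1.0, "bright": 0.02, "kelvin": 2500},
    {"name": "sunrise", "start": 0.3125, "hue": 30, "sat": 1.0, "bright": 0.65, "kelvin": 2800},
    {"name": "sunrise-end", "start": 0.3535, "hue": 30, "sat": 0.6, "bright": 0.7, "kelvin": 3300},
    {"name": "bed", "start": 0.9375, "hue": 20, "sat": 1.0, "bright": 0.05, "kelvin": 2500},
]


def state_at(states, frac):
    """Blend between the scene in effect at `frac` and the one that follows it."""
    ordered = sorted(states, key=lambda s: s["start"])
    starts = [s["start"] for s in ordered]
    # Index of the last scene that has started; -1 wraps to yesterday's last scene.
    i = bisect_right(starts, frac) - 1
    cur, nxt = ordered[i], ordered[(i + 1) % len(ordered)]
    # Modulo 1.0 keeps the spans positive when the pair straddles midnight.
    span = (nxt["start"] - cur["start"]) % 1.0
    t = ((frac - cur["start"]) % 1.0) / span if span else 0.0
    out = {"from": cur["name"], "to": nxt["name"]}
    for key in ("hue", "sat", "bright", "kelvin"):
        out[key] = cur[key] + (nxt[key] - cur[key]) * t
    return out


# Example: a little before 8am (0.333 of the day), halfway through sunrise.
print(state_at(STATES, 0.333))
```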

I plan to add a 'bypass' toggle to the controller that allows the user to manually control the lights again, and perhaps implement something to let you enumerate the lights you want included in the data.json - right now it acts on all LIFX lights connected to the API token you provide.

Next big feature after that is Philips Hue support ...

You can check out the repository here ... please let me know about all the bugs you find and features you want.

3D-PRINTED (and MILLED) LENSES with TUNED CHARACTERISTICS

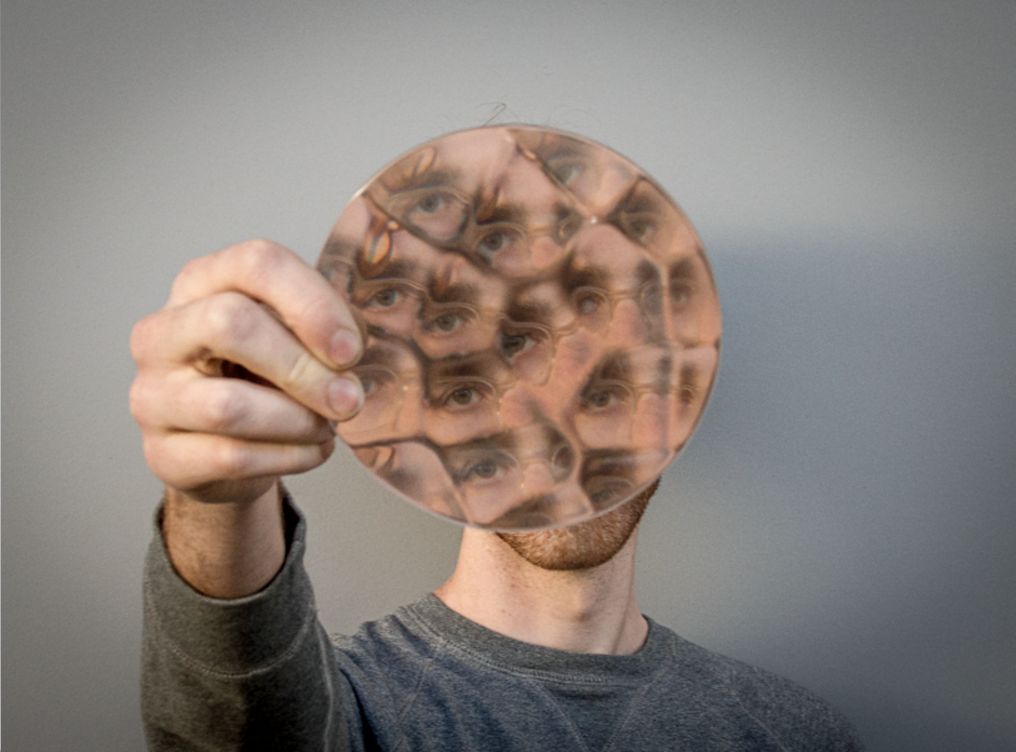

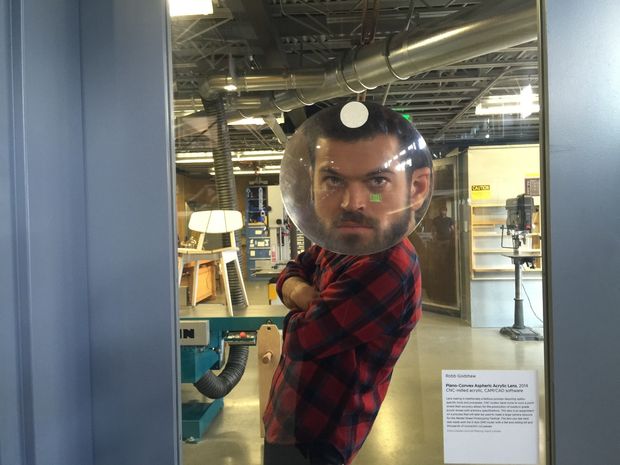

Creative Applications has a writeup of Robb Godshaw's process for using Rhino with Neon raytracing and T-Splines for freeform surfaces to more or less eyeball brute-reverse the Mac Photobooth warp filters as lenses (and some sunglasses), then fabbing on the photosensitive-resin-medium printer Objet Connex 500 (fed with VeroClear) or on a 3-axis CNC mill (the preferred, albeit more limiting route).

Check the video for a quick breakdown of the process and some of the objects produced in the workflow, or click through to the Instructable for the finer points and deep cuts like this introduction to Hamiltonian Optics.

Robb mentions using this process to create a custom projection lens for his Lunar Persistence Apparatus robotic projector ... intriguing for lower-resolution applications of projection-as-lighting rather than high-resolution digital imagery.

3D-PRINTED ZOETROPES

This process has been a pet pitch of mine for a while but nobody’s biting yet — 3D printing makes a ton of sense for zoetropes, not just smaller ones like in this example but for large, character-driven, intricate scenes.

What’s great about this approach is you can pre-vis everything (incl rotation and frame rate, etc.), tweak and tweak, and when it’s ready, print each component, draw out your positioning on your turntable, stick them down, and then tune your motor / strobe / camera system without having to re-hand-sculpt in the event of a mixup.
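The motor / strobe tuning part of that pre-vis is mostly arithmetic, so here's a back-of-the-envelope sketch. The function names and the example numbers are mine, and it assumes frames spaced evenly around the turntable: the strobe needs to fire once per frame step, and the flash has to be short enough that the sculpt barely moves while it's lit.

```python
import math


def strobe_hz(rpm, frames_per_rev):
    """Flashes per second so each flash catches the next frame in the same spot."""
    return rpm / 60.0 * frames_per_rev


def max_flash_seconds(rpm, radius_mm, blur_mm):
    """Longest flash that keeps motion blur at the given radius under blur_mm."""
    surface_speed = 2 * math.pi * radius_mm * rpm / 60.0  # mm per second
    return blur_mm / surface_speed


# Example: a 20-frame platter at 45 rpm wants a 15 Hz strobe.
print(strobe_hz(45, 20))
# Example: at 60 rpm and 100 mm out, 1 mm of blur allows about 1.6 ms of flash.
print(max_flash_seconds(60, 100, 1.0))
```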

Anyway, Edmark is doing Python > Rhino > STL for forms following the 137.5-degree-distribution [aka the Golden Angle] and Fibonacci sequences common in plants, which is exactly the sort of arguably completely new thing 3D printing is bringing into the world.
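If you haven't played with the Golden Angle before, the basic layout is only a few lines of Python. To be clear, this is not Edmark's code, just the classic flat sunflower arrangement (Vogel's model) that this kind of form starts from: point k sits at k times the golden angle, at a radius that grows with the square root of k. `phyllotaxis` and `scale` are names I've picked for the sketch.

```python
import math

GOLDEN_ANGLE = math.pi * (3 - math.sqrt(5))  # radians, about 137.5 degrees


def phyllotaxis(n_points, scale=1.0):
    """Return (x, y) positions for n_points seeds laid out on a golden-angle spiral."""
    points = []
    for k in range(n_points):
        r = scale * math.sqrt(k)   # radius grows so seeds pack at even density
        theta = k * GOLDEN_ANGLE   # each seed turns one golden angle past the last
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


# Example: the first few seed positions.
for x, y in phyllotaxis(5):
    print(round(x, 3), round(y, 3))
```

From there, getting to a printable object is a matter of sweeping or lofting geometry at each position in your modeler of choice and exporting an STL.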

I strongly recommend checking out Vi Hart's videos linked above, BTW — here's an embed in case you missed it:

... also as part of Edmark's awesome Instructables breakdown of the process is this beautiful and didactic Fibonacci puzzle stop-motion:

Also worth mentioning is this really lovely zoetrope record project by UK-based AV outfit Sculpture:

--- UPDATE ---

More on this via EK at HUSH ...

Nervous System have been making their own 3D-printed zoetropes in a series titled 'GROWING OBJECTS'. They're created with generative growth systems (Nervous' wheelhouse) and prototyped as GIFs ... this one stands out from their pre-vis collection...

... and here's some documentation of the finished pieces:

Update 05/17/15:

More from Creators Project - this one from Mat Collishaw - a depiction of Rubens' Massacre of the Innocents, way more in the wheelhouse of what I've had in mind:

... love how the physical setting of this one reinforces the theme. Really amazing work, and some interesting comments on the Vimeo page from one of the 3D artists involved regarding the challenges of documenting a piece like this - because the camera shutter wasn't synced with the stroboscope, he had to go through and manually select well-lit frames for inclusion in this video. There's gotta be a better way to do that, but maybe not without a good bit of additional work jamming the camera with the strobe, or without using a variable frame rate body like a Phantom...

ADAPTIVE LIGHTING

One of my goals at CES this year was to build a better understanding of the C&C flow behind the home automation protocols showing there (Zigbee/CLA, Z-Wave, HomeKit-based solutions, Bluetooth-hybrid mesh, etc.) in order to suss out the feasibility of home-rolling a circadian-adaptive lighting scheduler driven by a simple switch / dimmer interface (Lutron showed something like this at CES this year but for Zigbee).

This, to me, is the real use case for RGB(W) addressable lighting - not blinking when a sports team wins, and not psychedelic 'lava lamp' looks. But this arguably more practical use gets about 1% of the ink that the more frivolous applications do, even from the early adopters and reviewers in the industry who jump in hard on this tech.

What I want is a single on/off or dimmer hardware controller that can query a color and intensity lookup table (with a few more inputs, taking into account geolocation and time of year) to determine the appropriate shade of white (see table below) at that given moment, then tell an arbitrary group of lights what to do. Oh, and I don't want it to be PC-based, 'cloud'-based, or outside my LAN.

Long story short, that's a non-trivial project but ongoing research [Lexar Lighting] indicates it’s worthwhile to consider an approach that approximates something like this in your own home/office, even if it is a pain in the ass to run it for the time being (currently, I’m manually bumping down a color table throughout the day in the LIFX iOS controller*)….

For something applicable to users of LIFX, Hue, or similar:

http://blog.lifx.co/2014/04/09/the-lighter-side-of-circadian-rhythms/

Image from Lexar Lighting

* Not an implicit endorsement for LIFX - despite the hardware having lighting capabilities that handily outstrip what the Hue system can do, the product is half-finished and mine are dying a slow heat death already. I’m not the only one, either - I'd say go Hue for now ... I might when these LIFX finally kick the bucket.

BLUE LIGHT WHEN YOU NEED IT

The research mentioned in the Lexar blog refers to blue light exposure in the 470-525nm range, and while those frequencies may be present in broader-spectrum (“full-spectrum”) incandescents, the discrete nature of LED lighting frequency output doesn’t guarantee sufficient intensities at those wavelengths, even when the light is set to a blue that appears to be in the neighborhood. The incandescents, on the other hand, are too broad-spectrum to be useful in this regard, as they’d need something like 10,000 lux output (uncomfortable to be around), indicating something more specialized.

If you really want to use blue light exposure as part of a bio-adaptive lighting plan or to combat seasonal affective disorder (the “winter blues”), I like the Philips BLU energy lights … (there are a few, but this one is stripped down to just a brick of ~480nm lights and, for the time being, can't be controlled beyond its single button.)

… I’ve been using one of these seasonally for the last 4 or so years and, anecdotally, I think it helps in avoiding a premature melatonin response (crashing, or the ’nap reflex') during the deepest darkest winter afternoons here in Brooklyn. That and coffee do the job nicely.

On a related note, my friend Mark (his project Spireworks is germane to the ongoing wrestling he and I have been doing with spatial approaches to lighting control) tipped me to this broad software approach [PDF] from amBX, a Philips R&D spinoff (last Philips namecheck, I promise) ... but I'll be damned if I'm going to have a PC OS behind my home lighting ... although maybe that's the root of my problem here.

... NOT WHEN YOU DON'T

You may have seen advice on limiting screen usage late at night for sleep reasons. Part of the logic there is to minimize stimulation right before going to bed, but another important bit is related to the light exposure topic at hand. The 'white' backlight in most modern LCD devices is a blue LED, yellow (phosphor)-coated to produce something more like white, and it is poorly filtered by your LCD screen, meaning you're pumping blue light into your eyes, right before bed.

I use f.lux on my Mac to address this, and I'd put it on my phone if I were the jailbreaking type. f.lux, if you're not familiar, applies an amber color profile, system-wide, any time the sun is down.

I was at first put off by the dying-fluorescent look of f.lux doing its thing late at night — it's got a decidedly 'burned' look. As someone who (somewhat excessively) calibrates monitors, I at first found trying to do anything image-related pretty frustrating after sundown, but Mark's comment on this made a believer out of me — he said, 'it reminds me that I probably shouldn't be using the computer then anyway'. Monitor tint as behavioral modification feedback. I like it.

The reality is that sometimes you need to work late, and for that, f.lux offers an option to temporarily disable the effect for a given app or until sunrise, and in so doing, consciously commit yourself to some light exposure that is likely to keep you up later.

UPDATE: 2/13/15

Startup lighting outfit Sunn look like they're off to a good start with their round, diffuse, sun-synced LED array. This is a 19" or 24" round wall- or ceiling-mounted fixture with C&C protocol TBD that claims LIFX and Hue integration via their companion smartphone app.

These are still a ways off from v2.5 aka safe waters and I'm once bitten already, but if you're feeling generous, my birthday is in April and Hanukkah is generally in December. FYI.

And while we're on the topic of things to buy me ... it's not adaptive per se but damn do I want one of these Coelux artificial skylights:

Similarly, this solar-powered reflector design, Lucy, while very much in the 'preorder while we design this thing' phase, makes a lot of sense for 'sun-shifting' in configurations where natural light is available but is too indirect to be used as illumination.

... like many crowdfunded, or preorder-driven business models, much remains to be seen about the quality of the implementation, but I love this idea.

UPDATE: 7/29/16

I've been following the progress of adaptive lighting startup Svet for a while now ... they've got a Zigbee / BLE, 1000 lumen, 1800K-8000K, high CRI (90-96) A21 or BR30 bulb on pre-order, with (the now usual) options for Android and iOS control, but the new part is the ability to configure individual lamps with specific brightness and color breakpoints throughout the day.

I very much like the idea of offloading the configuration into the lamp and then being able to use the wall switch to control on/off without interrupting the settings, although the usual 'IoS' caveats apply - if the company goes out of business or stops supporting the app of your choice, you're stuck with a dumb $70 lightbulb, or, perhaps worse, a bulb with a nonsense setting that you can't change any longer.

UPDATE: 7/29/16

LIFX recently announced a line of 'Day to Dusk' bulbs, while simultaneously rolling out the same 'Day to Dusk' capability through their iOS app for existing bulbs. The new lamps are 800lm and 1500-4000K, a bit less bright than the full 1100lm LIFX lamps and with much more limited capabilities in both whites and colors.

The Day to Dusk feature is a 4-breakpoint curve a bit like f.lux's capability — the events are 'Wake Up', 'Day', 'Evening' , and 'Night Light'. You currently can't customize the settings for each breakpoint - only the time they occur, although LIFX support tells me customization is in the works. You also can't add breakpoints - only disable one or more.

I'm currently testing this on a mix of LIFX lamps and I'll follow up when I have more of an opinion. Reserving judgement at the moment as the feature is very new.

UPDATE: 09/27/22

Light Cognitive have what looks like a Coelux-lite offering for the ‘bespoke’ set. I say ‘bespoke’ because they use the word as often as possible when describing the product.

WWD LIGHT WORK

In less than two weeks from brief to delivery, we made this — a 280-channel rig that runs an hour-long, audio-reactive, dynamic program of custom looks.

It's made to be integrated into an armature behind a massive diffusion structure lofted above the audience in an inflatable temporary structure at South Street Seaport for WWD's '10 of Tomorrow' event.

Ancillary Magnet testing documentation on LED and software rig for WWD's Ten of Tomorrow event with Industria Creative. This video is annoyingly horizontal because the piece is 14' long. Deal with it.

SOME VIDEO of our GLASSLANDS INSTALLATION

If you like tubes, you're in luck.

For more on this project, check out the case study.

Additionally, if you want to get even wonkier, check out this video quickstart guide for the software that drives the installation. If that's not enough, use the contact page to reach out for the manual or for a copy of the software to drive your own 300-channel tube installation.

SMITHSONIAN TRIPTYCH

We're just now wrapping up a design and install project with the Smithsonian Freer and Sackler Galleries including this boss triptych projection and playback system.

The exhibit, which details the exploits of Wendell Phillips, a kind of real-life Indiana Jones, opens October 11. Get your artifact on!

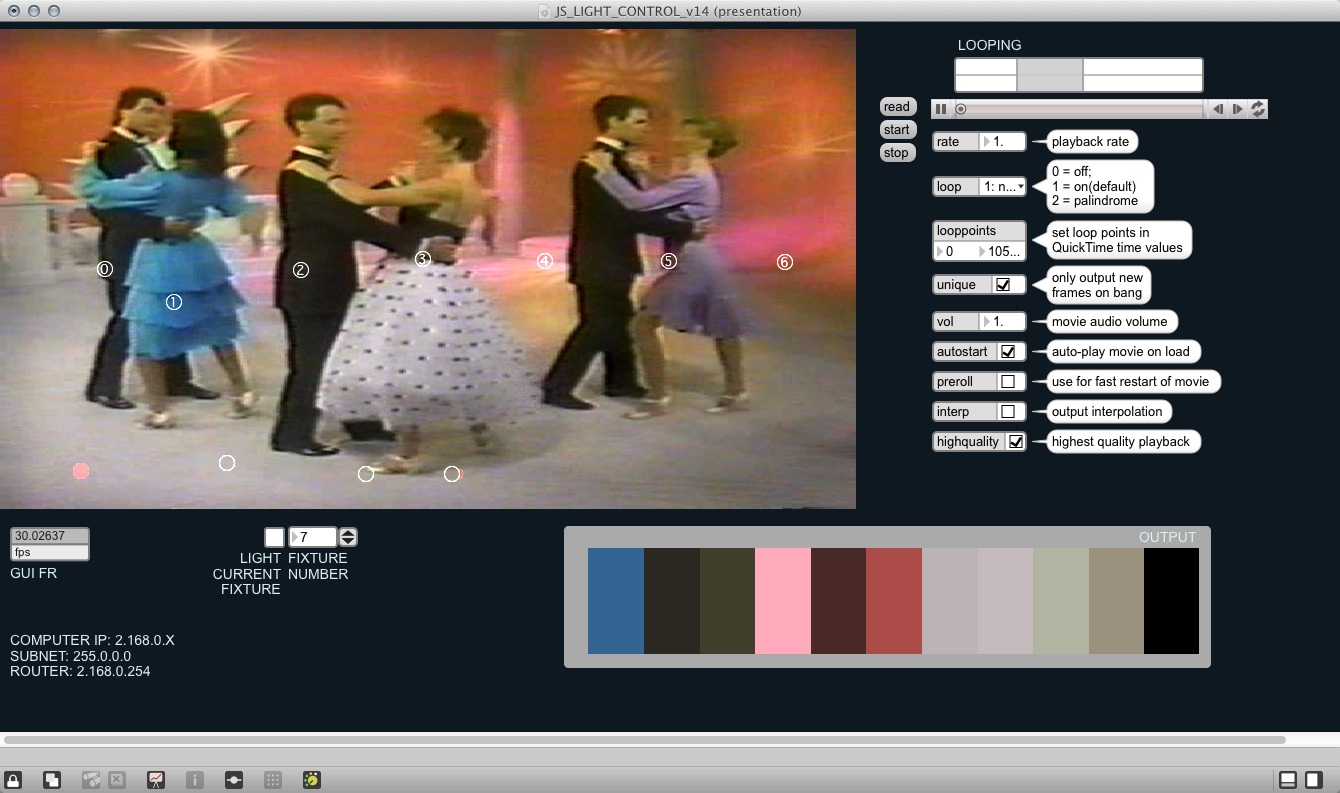

JS/FOURSQUARE: REALTIME LIGHT CONTROL FROM VIDEO ANALYSIS

Our friends at JaegerSloan recently came looking for a way to dynamically light a greenscreen shoot using realtime color information from the background video. With a system like that, they figured, they could match lighting without having to do much in post. It was a good idea.

The content was a Foursquare spot, the foreground subject was a hand holding a smartphone, and the composited background was all stock footage.

To make this happen, I whipped up a simple system that allows the user to click points on the background video to define an arbitrary number of pixels from which to sample color. It then pipes that color information out to an arbitrary number of DMX lights.

Included in the interface is a preview of what's going out to each light as well as simple transport and looping controls for video playback.
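For the curious, the core of the idea fits in a handful of lines. What follows is a hedged sketch rather than the tool we actually used on the shoot: it assumes one sample point per light, 3-channel RGB fixtures, and a frame that's already been decoded into rows of (r, g, b) tuples. `sample_to_dmx` and its parameters are made-up names, and actually sending the universe out over a DMX interface is left out.

```python
def sample_to_dmx(frame, points, start_addresses):
    """Build one DMX512 universe from colors sampled out of a video frame.

    frame: rows of (r, g, b) tuples, each value 0-255.
    points: one (x, y) pixel coordinate per light.
    start_addresses: 1-based DMX address of each light's red channel.
    """
    universe = bytearray(512)  # one DMX512 universe, channels 1-512
    for (x, y), addr in zip(points, start_addresses):
        if not 1 <= addr <= 510:
            raise ValueError("an RGB fixture needs three channels inside 1-512")
        r, g, b = frame[y][x]
        # DMX addresses are 1-based, so address N lives at index N - 1.
        universe[addr - 1:addr + 2] = bytes((r, g, b))
    return universe


# Example: a tiny 2x2 "frame" driving two lights patched at addresses 1 and 4.
frame = [[(0, 0, 0), (255, 128, 0)],
         [(10, 20, 30), (0, 0, 255)]]
universe = sample_to_dmx(frame, [(1, 0), (0, 1)], [1, 4])
print(list(universe[:6]))
```

Run that once per video frame and hand the resulting 512 bytes to whatever DMX output you have, and the lights follow the picture.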

Shutterstock did a little writeup -- check the video below for a peek of it in action.

Pick some color points by clicking on a source video (top left), preview the resultant output to your lights (bottom right) ...

... you get realtime color control to any RGB DMX light.

One, two, cha-cha-cha, etc.

LYVE at CES 2014

LEDs rear their shiny heads again in this HUSH installation for the formerly stealth, now public Lyve Home data management device.

The installation was a storytelling tool to be used as a part of private presentations in a suite space in the Venetian hotel, a space that came with a number of constraints to address. Nothing could be taller than 6', nor affixed to the walls or ceiling. Very limited power was available. Few things in the small room could be moved. Shipping drayage was charged by weight. Those and about 130 pages of other things you don't want to hear about.

With all these (thoroughly-researched) constraints in mind, we helped the HUSH team come up with an immersive display that allowed a docent to control playback of video content coordinated with LED animations within translucent plinths. The animation shows personal content (images, videos, etc.) originating in one device, which then metaphorically (and visually) fragments and makes its way onto all of the synchronized devices, illustrated by the movement of color onscreen and through the plinths.